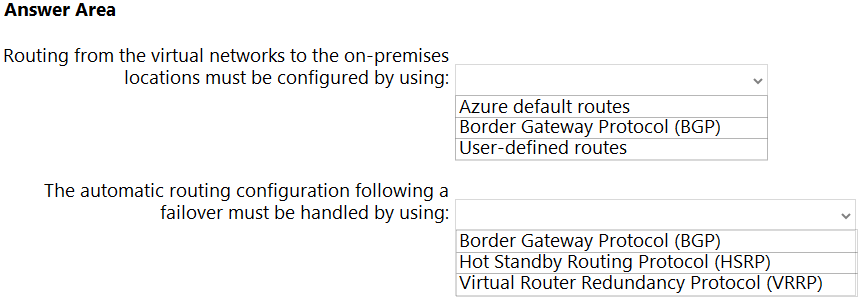

HOTSPOT -

Your company has two on-premises sites in New York and Los Angeles and Azure virtual networks in the East US Azure region and the West US Azure region.

Each on-premises site has ExpressRoute Global Reach circuits to both regions.

You need to recommend a solution that meets the following requirements:

✑ Outbound traffic to the internet from workloads hosted on the virtual networks must be routed through the closest available on-premises site.

✑ If an on-premises site fails, traffic from the workloads on the virtual networks to the internet must reroute automatically to the other site.

What should you include in the recommendation? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

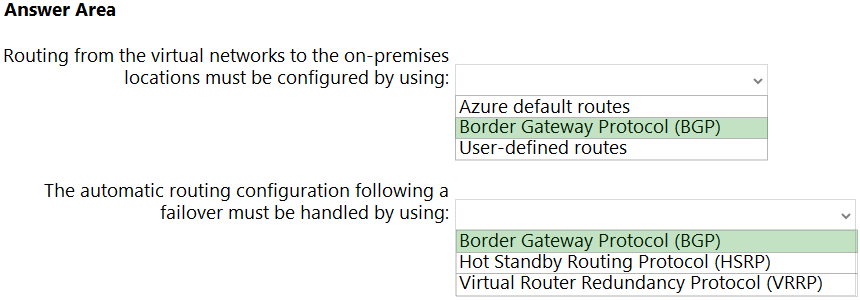

Answer:

Box 1: Border Gateway Protocol (BGP)

An on-premises network gateway can exchange routes with an Azure virtual network gateway by using the Border Gateway Protocol (BGP). Whether you use BGP with an Azure virtual network gateway depends on the type you selected when you created the gateway. If the type is:

ExpressRoute: You must use BGP to advertise on-premises routes to the Microsoft Edge router. You cannot create user-defined routes to force traffic to the ExpressRoute virtual network gateway if you deploy a virtual network gateway of type ExpressRoute. You can use user-defined routes to force traffic from ExpressRoute to, for example, a network virtual appliance.

Box 2: Border Gateway Protocol (BGP)

Incorrect:

Microsoft does not support HSRP or VRRP for high availability configurations.
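The failover behaviour that BGP provides can be illustrated with a small local model. This is only a sketch, not an Azure API: the `Advertisement` type, the `pick_exit` helper, and the AS-path lengths are hypothetical. It assumes each on-premises site advertises a default route over ExpressRoute and that the more distant site prepends its AS path so that it is less preferred.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advertisement:
    """A default-route advertisement from one on-premises site (hypothetical model)."""
    site: str
    as_path_len: int  # a longer AS path means a less preferred route
    up: bool = True   # False models a failed site that has withdrawn its route


def pick_exit(ads):
    """Return the site with the shortest AS path among routes still advertised."""
    live = [a for a in ads if a.up]
    if not live:
        return None
    return min(live, key=lambda a: a.as_path_len).site


# Routes as seen from the East US virtual network (illustrative values only).
normal = pick_exit([
    Advertisement("New York", as_path_len=1),
    Advertisement("Los Angeles", as_path_len=3),
])

# New York fails and withdraws its route; traffic shifts automatically.
failed_over = pick_exit([
    Advertisement("New York", as_path_len=1, up=False),
    Advertisement("Los Angeles", as_path_len=3),
])

print(normal, failed_over)
```

In this model the preference for the closest site and the automatic reroute both come from route selection alone, which is why no separate failover protocol is needed.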

Reference:

https://docs.microsoft.com/ja-jp/azure/expressroute/designing-for-disaster-recovery-with-expressroute-privatepeering

https://docs.microsoft.com/en-us/azure/expressroute/expressroute-routing

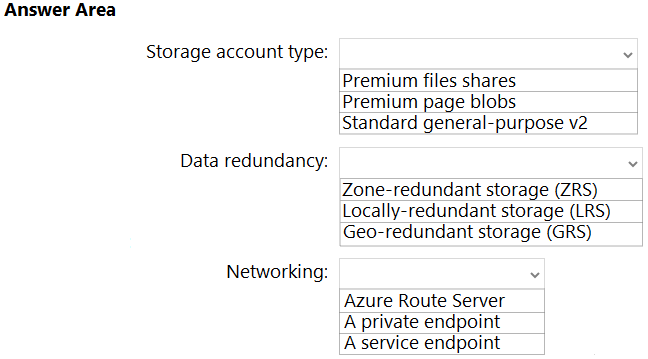

HOTSPOT -

You are designing an application that will use Azure Linux virtual machines to analyze video files. The files will be uploaded from corporate offices that connect to Azure by using ExpressRoute.

You plan to provision an Azure Storage account to host the files.

You need to ensure that the storage account meets the following requirements:

✑ Supports video files of up to 7 TB

✑ Provides the highest availability possible

✑ Ensures that storage is optimized for the large video files

✑ Ensures that files from the on-premises network are uploaded by using ExpressRoute

How should you configure the storage account? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

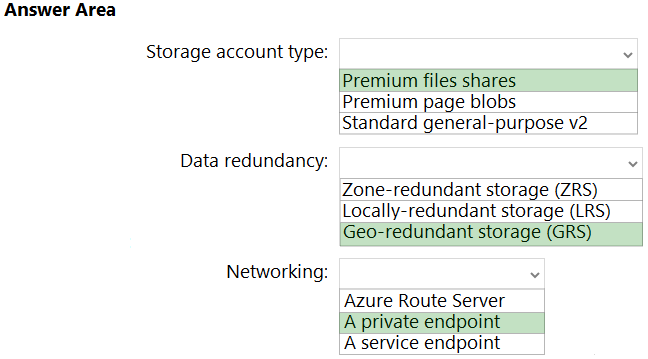

Answer:

Box 1: Premium page blobs -

The maximum size for a page blob is 8 TiB.

Incorrect:

Not Premium file shares:

The maximum file size for both Standard and Premium file shares is 4 TiB.
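The size comparison behind Box 1 can be checked with a few lines of arithmetic. This is a minimal sketch that takes the limits quoted above (8 TiB per page blob, 4 TB per file in Azure Files, read here as binary units) and uses the stricter reading of the 7 TB requirement as 7 TiB. The dictionary keys are illustrative labels only.

```python
TIB = 2**40  # bytes in one tebibyte

# Largest single video file the design must hold (stricter, binary reading).
required_bytes = 7 * TIB

# Per-object limits as quoted in the explanation above.
limits = {
    "page blob": 8 * TIB,
    "Azure Files file": 4 * TIB,
}

# True where a single object of that type can hold the largest video file.
fits = {name: limit >= required_bytes for name, limit in limits.items()}

for name, ok in fits.items():
    print(f"{name}: {'fits' if ok else 'too small'}")
```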

Box 2: Geo-redundant storage (GRS)

GRS provides additional redundancy for data storage compared to LRS or ZRS.

Box 3: A private endpoint -

Azure Private Link allows you to securely link Azure PaaS services to your virtual network using private endpoints. For many services, you just set up an endpoint per resource. This means you can connect your on-premises or multi-cloud servers with Azure Arc and send all traffic over an Azure ExpressRoute or site-to-site VPN connection instead of using public networks.

Reference:

https://docs.microsoft.com/en-us/rest/api/storageservices/understanding-block-blobs--append-blobs--and-page-blobs

https://docs.microsoft.com/en-us/azure/storage/files/storage-files-scale-targets

https://docs.microsoft.com/en-us/azure/azure-arc/servers/private-link-security

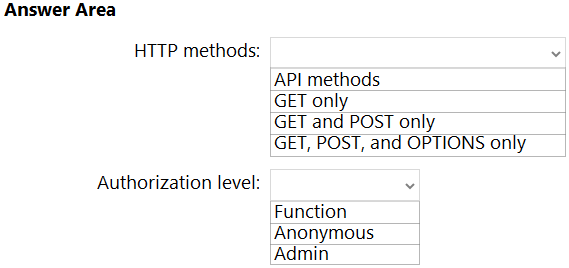

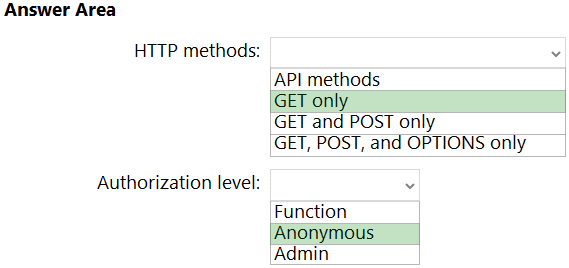

HOTSPOT -

A company plans to implement an HTTP-based API to support a web app. The web app allows customers to check the status of their orders.

The API must meet the following requirements:

✑ Implement Azure Functions.

✑ Provide public read-only operations.

✑ Prevent write operations.

You need to recommend which HTTP methods and authorization level to configure.

What should you recommend? To answer, configure the appropriate options in the dialog box in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

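Box 1: GET only

GET is the HTTP method for read-only operations. Leaving out POST, PUT, and DELETE prevents write operations.

Box 2: Anonymous

The Anonymous authorization level requires no API key, which suits public operations.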
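As an illustration, the two selections correspond to the `methods` and `authLevel` properties of an HTTP trigger binding in a function's `function.json`. The sketch below builds that structure as a plain Python dictionary so it can be inspected without the Functions runtime. The `allows` helper is hypothetical and only mimics the method filter locally.

```python
import json

# HTTP trigger binding: public (no key required) and restricted to GET.
http_trigger_binding = {
    "type": "httpTrigger",
    "direction": "in",
    "name": "req",
    "authLevel": "anonymous",
    "methods": ["get"],
}

# Matching HTTP output binding that returns the response.
http_output_binding = {"type": "http", "direction": "out", "name": "$return"}

function_json = {"bindings": [http_trigger_binding, http_output_binding]}


def allows(binding, method):
    """Hypothetical local check: is this HTTP method listed on the trigger?"""
    return method.lower() in binding["methods"]


print(json.dumps(function_json, indent=2))
print("GET allowed:", allows(http_trigger_binding, "GET"))
print("POST allowed:", allows(http_trigger_binding, "POST"))
```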

You have an Azure subscription.

You need to recommend a solution to provide developers with the ability to provision Azure virtual machines. The solution must meet the following requirements:

✑ Only allow the creation of the virtual machines in specific regions.

✑ Only allow the creation of specific sizes of virtual machines.

What should you include in the recommendation?

Answer:

B

Azure Policy lets you restrict resource creation to allowed locations and allowed VM SKUs.
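The effect of combining a location restriction with a size restriction can be sketched as a local model. This is not the Azure Policy engine or its API. The `evaluate` helper and the chosen regions and sizes are assumptions made for illustration only.

```python
def evaluate(vm, allowed_locations, allowed_sizes):
    """Return ("allow", []) or ("deny", reasons) for a requested VM (local model)."""
    reasons = []
    if vm["location"] not in allowed_locations:
        reasons.append(f"location {vm['location']} is not allowed")
    if vm["size"] not in allowed_sizes:
        reasons.append(f"size {vm['size']} is not allowed")
    return ("deny", reasons) if reasons else ("allow", [])


# Hypothetical allow-lists that an administrator might assign.
ALLOWED_LOCATIONS = {"eastus", "westus"}
ALLOWED_SIZES = {"Standard_B2s", "Standard_D2s_v3"}

ok = evaluate({"location": "eastus", "size": "Standard_B2s"},
              ALLOWED_LOCATIONS, ALLOWED_SIZES)
bad = evaluate({"location": "northeurope", "size": "Standard_D64s_v3"},
               ALLOWED_LOCATIONS, ALLOWED_SIZES)

print(ok)
print(bad)
```

A request is denied when either condition fails, which mirrors assigning both restrictions to the same scope.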

Reference:

https://docs.microsoft.com/en-us/azure/governance/policy/tutorials/create-and-manage

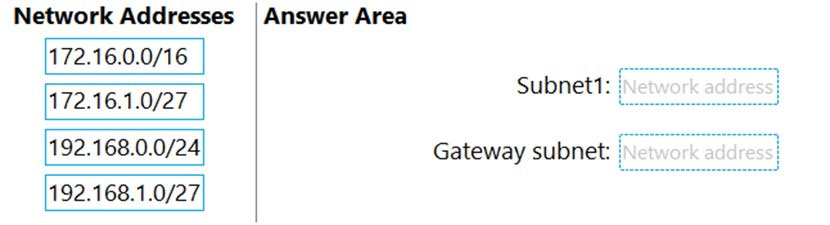

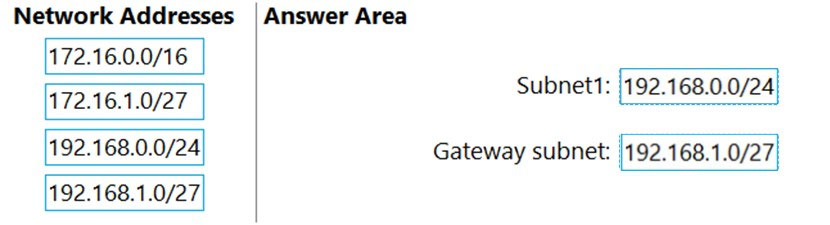

DRAG DROP -

You have an on-premises network that uses an IP address space of 172.16.0.0/16.

You plan to deploy 30 virtual machines to a new Azure subscription.

You identify the following technical requirements:

✑ All Azure virtual machines must be placed on the same subnet named Subnet1.

✑ All the Azure virtual machines must be able to communicate with all on-premises servers.

✑ The servers must be able to communicate between the on-premises network and Azure by using a site-to-site VPN.

You need to recommend a subnet design that meets the technical requirements.

What should you include in the recommendation? To answer, drag the appropriate network addresses to the correct subnets. Each network address may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

Answer:
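The reasoning behind a subnet design like this can be sketched with Python's `ipaddress` module. The candidate ranges below are hypothetical and are not taken from the answer area. The check assumes two constraints: the Azure range must not overlap the on-premises 172.16.0.0/16 space (otherwise routing over the site-to-site VPN breaks), and Azure reserves five addresses in every subnet, so Subnet1 needs at least 35 addresses to host 30 virtual machines.

```python
import ipaddress

ON_PREM = ipaddress.ip_network("172.16.0.0/16")
AZURE_RESERVED_PER_SUBNET = 5  # addresses Azure reserves in each subnet
VM_COUNT = 30


def usable_hosts(net):
    """Addresses left for workloads after Azure's reserved addresses."""
    return net.num_addresses - AZURE_RESERVED_PER_SUBNET


def is_viable(cidr):
    """True if the range avoids the on-premises space and fits all VMs."""
    net = ipaddress.ip_network(cidr)
    return (not net.overlaps(ON_PREM)) and usable_hosts(net) >= VM_COUNT


# Hypothetical candidates for Subnet1.
candidates = ["172.16.1.0/26", "192.168.0.0/27", "192.168.0.0/26"]
results = {cidr: is_viable(cidr) for cidr in candidates}

for cidr, ok in results.items():
    print(cidr, "viable" if ok else "not viable")
```

Here the first candidate fails on overlap and the second on capacity (32 addresses minus 5 reserved leaves 27), while a non-overlapping /26 leaves 59 usable addresses.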

You have data files in Azure Blob Storage.

You plan to transform the files and move them to Azure Data Lake Storage.

You need to transform the data by using mapping data flow.

Which service should you use?

Answer:

C

You can copy and transform data in Azure Data Lake Storage Gen2 using Azure Data Factory or Azure Synapse Analytics.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-data-lake-storage

You have an Azure subscription.

You need to deploy an Azure Kubernetes Service (AKS) solution that will use Windows Server 2019 nodes. The solution must meet the following requirements:

✑ Minimize the time it takes to provision compute resources during scale-out operations.

✑ Support autoscaling of Windows Server containers.

Which scaling option should you recommend?

Answer:

C

Deployments can scale across AKS with no delay as cluster autoscaler deploys new nodes in your AKS cluster.

Note: AKS clusters can scale in one of two ways:

* The cluster autoscaler watches for pods that can't be scheduled on nodes because of resource constraints. The cluster then automatically increases the number of nodes.

* The horizontal pod autoscaler uses the Metrics Server in a Kubernetes cluster to monitor the resource demand of pods. If an application needs more resources, the number of pods is automatically increased to meet the demand.

Incorrect:

Not D: If your application needs to rapidly scale, the horizontal pod autoscaler may schedule more pods than can be provided by the existing compute resources in the node pool. If configured, this scenario would then trigger the cluster autoscaler to deploy additional nodes in the node pool, but it may take a few minutes for those nodes to successfully provision and allow the Kubernetes scheduler to run pods on them.
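The interaction between the two autoscalers can be sketched numerically. The replica formula below is the one documented for the Kubernetes horizontal pod autoscaler. The pods-per-node capacity and the sample figures are assumptions chosen for illustration.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric):
    """Horizontal pod autoscaler rule: ceil(current * currentMetric / targetMetric)."""
    return math.ceil(current_replicas * current_metric / target_metric)


def nodes_needed(pods, pods_per_node):
    """Nodes required to schedule the given number of pods (simplified model)."""
    return math.ceil(pods / pods_per_node)


# Assumed scenario: 4 pods at 90% CPU against a 50% target, on 2 existing nodes
# that can each fit 3 of these pods.
replicas = desired_replicas(current_replicas=4, current_metric=90, target_metric=50)
extra_nodes = max(0, nodes_needed(replicas, pods_per_node=3) - 2)

print(f"pods wanted: {replicas}, extra nodes the cluster autoscaler must add: {extra_nodes}")
```

When `extra_nodes` is above zero, the additional pods stay pending until the new nodes finish provisioning, which is the delay described above.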

Reference:

https://docs.microsoft.com/en-us/azure/aks/cluster-autoscaler

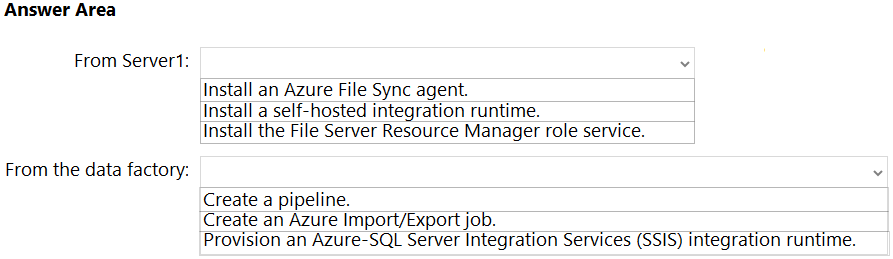

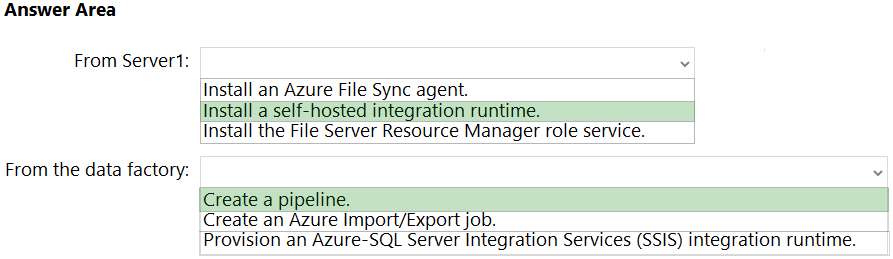

HOTSPOT -

Your on-premises network contains a file server named Server1 that stores 500 GB of data.

You need to use Azure Data Factory to copy the data from Server1 to Azure Storage.

You add a new data factory.

What should you do next? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: Install a self-hosted integration runtime.

If your data store is located inside an on-premises network, an Azure virtual network, or Amazon Virtual Private Cloud, you need to configure a self-hosted integration runtime to connect to it.

The connectVia property of the linked service specifies the integration runtime used to connect to the data store. You can use the Azure integration runtime or a self-hosted integration runtime (if your data store is located in a private network). If the property is not specified, the default Azure integration runtime is used.

Box 2: Create a pipeline.

You perform the Copy activity with a pipeline.
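The two steps fit together roughly as follows. This is an abbreviated sketch of the JSON shapes involved, built as Python dictionaries. All resource names (`Server1FileSystem`, `SelfHostedIR`, the dataset names) and the placeholder credentials are hypothetical, and the Copy activity omits its source and sink settings, so treat it as a skeleton rather than a deployable definition.

```python
import json

# Linked service for the on-premises file server, routed through the
# self-hosted integration runtime via "connectVia".
linked_service = {
    "name": "Server1FileSystem",  # hypothetical name
    "properties": {
        "type": "FileServer",
        "typeProperties": {
            "host": "<path to the share on Server1>",
            "userId": "<domain>\\<user>",
            "password": {"type": "SecureString", "value": "<password>"},
        },
        "connectVia": {
            "referenceName": "SelfHostedIR",  # hypothetical runtime name
            "type": "IntegrationRuntimeReference",
        },
    },
}

# Pipeline containing a single Copy activity (source/sink settings omitted).
pipeline = {
    "name": "CopyServer1ToStorage",  # hypothetical name
    "properties": {
        "activities": [
            {
                "name": "CopyFiles",
                "type": "Copy",
                "inputs": [{"referenceName": "Server1Files", "type": "DatasetReference"}],
                "outputs": [{"referenceName": "StorageBlobs", "type": "DatasetReference"}],
            }
        ]
    },
}

print(json.dumps(linked_service, indent=2))
print(json.dumps(pipeline, indent=2))
```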

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/connector-file-system

You have an Azure subscription.

You need to recommend an Azure Kubernetes Service (AKS) solution that will use Linux nodes. The solution must meet the following requirements:

✑ Minimize the time it takes to provision compute resources during scale-out operations.

✑ Support autoscaling of Linux containers.

✑ Minimize administrative effort.

Which scaling option should you recommend?

Answer:

C

To rapidly scale application workloads in an AKS cluster, you can use virtual nodes. With virtual nodes, you have quick provisioning of pods, and only pay per second for their execution time. You don't need to wait for Kubernetes cluster autoscaler to deploy VM compute nodes to run the additional pods. Virtual nodes are only supported with Linux pods and nodes.

Reference:

https://docs.microsoft.com/en-us/azure/aks/virtual-nodes

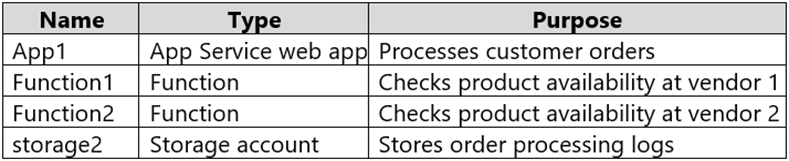

You are designing an order processing system in Azure that will contain the Azure resources shown in the following table.

The order processing system will have the following transaction flow:

✑ A customer will place an order by using App1.

✑ When the order is received, App1 will generate a message to check for product availability at vendor 1 and vendor 2.

✑ An integration component will process the message, and then trigger either Function1 or Function2 depending on the type of order.

✑ Once a vendor confirms the product availability, a status message for App1 will be generated by Function1 or Function2.

✑ All the steps of the transaction will be logged to storage1.

Which type of resource should you recommend for the integration component?

Answer:

B

Azure Data Factory is a cloud-based ETL and data integration service that allows you to create data-driven workflows for orchestrating data movement and transforming data at scale. Using Azure Data Factory, you can create and schedule data-driven workflows (called pipelines) that can ingest data from disparate data stores.

Data Factory contains a series of interconnected systems that provide a complete end-to-end platform for data engineers.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/introduction