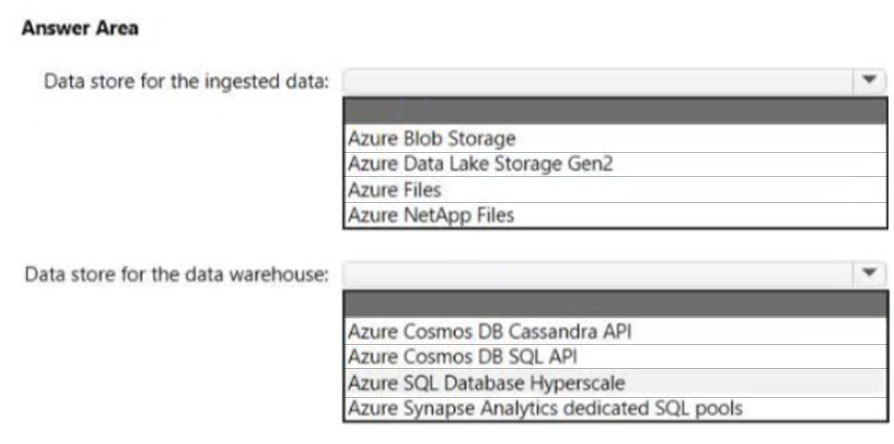

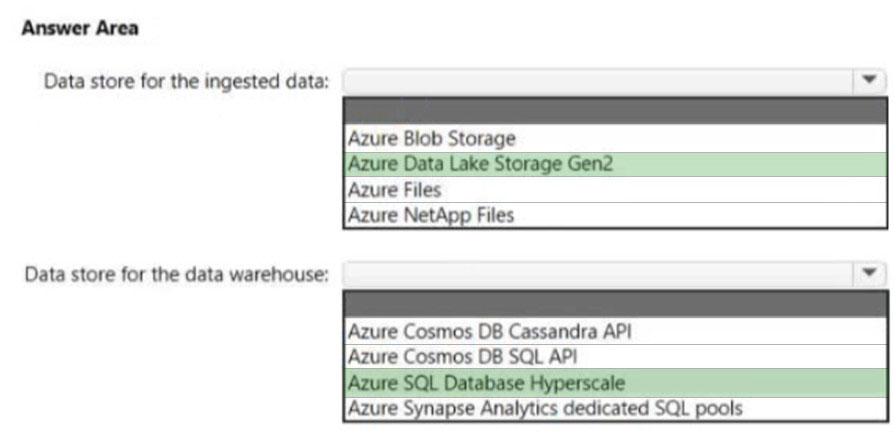

HOTSPOT -

You are designing a data storage solution to support reporting.

The solution will ingest high volumes of data in the JSON format by using Azure Event Hubs. As the data arrives, Event Hubs will write the data to storage. The solution must meet the following requirements:

✑ Organize data in directories by date and time.

✑ Allow stored data to be queried directly, transformed into summarized tables, and then stored in a data warehouse.

✑ Ensure that the data warehouse can store 50 TB of relational data and support between 200 and 300 concurrent read operations.

Which service should you recommend for each type of data store? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: Azure Data Lake Storage Gen2

Azure Data Explorer integrates with Azure Blob Storage and Azure Data Lake Storage (Gen1 and Gen2), providing fast, cached, and indexed access to data stored in external storage. You can analyze and query data without prior ingestion into Azure Data Explorer. You can also query across ingested and uningested external data simultaneously.

Azure Data Lake Storage is optimized storage for big data analytics workloads.

Use cases: Batch, interactive, streaming analytics and machine learning data such as log files, IoT data, click streams, large datasets
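The "directories by date and time" requirement can be pictured with a small sketch. It assumes a layout modeled on the Event Hubs Capture default naming convention (`{Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}`); the function name, namespace, and hub name are hypothetical and only illustrate how a hierarchical folder path is derived from a timestamp.

```python
from datetime import datetime, timezone


def capture_blob_path(namespace: str, event_hub: str, partition_id: int,
                      window_start: datetime) -> str:
    """Build a date/time-partitioned directory path for one capture window.

    Hypothetical helper: it mirrors the default Capture layout but does not
    call any Azure API.
    """
    ts = window_start.astimezone(timezone.utc)  # normalize to UTC
    return f"{namespace}/{event_hub}/{partition_id}/{ts:%Y/%m/%d/%H/%M/%S}"


example = capture_blob_path(
    "contoso-ns", "telemetry", 0,
    datetime(2024, 3, 15, 9, 30, 0, tzinfo=timezone.utc),
)
print(example)  # contoso-ns/telemetry/0/2024/03/15/09/30/00
```

A hierarchical namespace, as in Data Lake Storage Gen2, treats each path segment as a real directory, which is what makes this kind of date and time layout practical to query and manage.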

Box 2: Azure SQL Database Hyperscale

Azure SQL Database Hyperscale is optimized for OLTP and high-throughput analytics workloads, with storage up to 100 TB.

A Hyperscale database supports up to 100 TB of data and provides high throughput and performance, as well as rapid scaling to adapt to the workload requirements. Connectivity, query processing, database engine features, etc. work like any other database in Azure SQL Database.

Hyperscale is a multi-tiered architecture with caching at multiple levels. Effective IOPS will depend on the workload.

Compare to:

General purpose: 500 IOPS per vCore with 7,000 maximum IOPS

Business critical: 5,000 IOPS with 200,000 maximum IOPS

Incorrect:

* Azure Synapse Analytics dedicated SQL pool.

Max database size: 240 TB

A maximum of 128 concurrent queries will execute, and the remaining queries will be queued. The requirement of 200 to 300 concurrent read operations exceeds this limit.
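As a rough illustration of why the 128-query limit matters here, the following sketch computes how many queries would have to wait at the concurrency levels named in the requirement. The function name is hypothetical; only the 128 cap and the 200 to 300 range come from the text above.

```python
def queued_queries(requested: int, max_concurrent: int = 128) -> int:
    """Return how many queries wait when demand exceeds the concurrency cap."""
    return max(0, requested - max_concurrent)


for requested in (200, 300):
    print(f"{requested} requested -> {queued_queries(requested)} queued")
# 200 requested -> 72 queued
# 300 requested -> 172 queued
```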

Reference:

https://docs.microsoft.com/en-us/azure/data-explorer/data-lake-query-data

https://docs.microsoft.com/en-us/azure/azure-sql/database/service-tier-hyperscale

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql-data-warehouse/sql-data-warehouse-service-capacity-limits

You have an app named App1 that uses an on-premises Microsoft SQL Server database named DB1.

You plan to migrate DB1 to an Azure SQL managed instance.

You need to enable customer managed Transparent Data Encryption (TDE) for the instance. The solution must maximize encryption strength.

Which type of encryption algorithm and key length should you use for the TDE protector?

Answer:

A

You are planning an Azure IoT Hub solution that will include 50,000 IoT devices.

Each device will stream data, including temperature, device ID, and time data. Approximately 50,000 records will be written every second. The data will be visualized in near real time.

You need to recommend a service to store and query the data.

Which two services can you recommend? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

Answer:

CD
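To get a feel for the ingestion rate in this scenario, the arithmetic can be sketched as follows. The 100-byte record size is purely an assumption for illustration; the question does not state one.

```python
RECORDS_PER_SECOND = 50_000   # from the scenario
DEVICES = 50_000              # from the scenario
ASSUMED_RECORD_BYTES = 100    # hypothetical size of one temperature/ID/time record

SECONDS_PER_DAY = 24 * 60 * 60

records_per_device_per_second = RECORDS_PER_SECOND / DEVICES
records_per_day = RECORDS_PER_SECOND * SECONDS_PER_DAY
gb_per_day = records_per_day * ASSUMED_RECORD_BYTES / 1_000_000_000  # decimal GB

print(records_per_device_per_second)  # 1.0
print(records_per_day)                # 4320000000
print(gb_per_day)                     # 432.0
```

At roughly one record per device per second and billions of rows per day, the store has to sustain continuous high-rate writes while still answering near-real-time queries.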

HOTSPOT -

You are planning an Azure Storage solution for sensitive data. The data will be accessed daily. The dataset is less than 10 GB.

You need to recommend a storage solution that meets the following requirements:

• All the data written to storage must be retained for five years.

• Once the data is written, the data can only be read. Modifications and deletion must be prevented.

• After five years, the data can be deleted, but never modified.

• Data access charges must be minimized.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer:
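One way to read the retention requirements is as a time-based immutability rule: no modification at any time, and deletion only after the retention period ends. The sketch below models that rule in plain Python. It does not call any Azure API, the class and method names are hypothetical, and five years is approximated as 5 × 365 days.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

FIVE_YEARS = timedelta(days=5 * 365)  # approximation for illustration


@dataclass(frozen=True)
class RetainedBlob:
    """Hypothetical model of a blob under a time-based retention rule."""
    created: datetime
    retention: timedelta = FIVE_YEARS

    def can_modify(self, now: datetime) -> bool:
        # Written data can never be modified, before or after retention ends.
        return False

    def can_delete(self, now: datetime) -> bool:
        # Deletion is allowed only once the retention period has elapsed.
        return now >= self.created + self.retention


blob = RetainedBlob(created=datetime(2020, 1, 1))
print(blob.can_delete(datetime(2022, 1, 1)))  # False
print(blob.can_delete(datetime(2026, 1, 1)))  # True
print(blob.can_modify(datetime(2026, 1, 1)))  # False
```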

HOTSPOT -

You are designing a data analytics solution that will use Azure Synapse and Azure Data Lake Storage Gen2.

You need to recommend Azure Synapse pools to meet the following requirements:

• Ingest data from Data Lake Storage into hash-distributed tables.

• Implement, query, and update data in Delta Lake.

What should you recommend for each requirement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer:
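The idea behind hash-distributed tables can be sketched as follows. A Synapse dedicated SQL pool spreads table rows across 60 distributions; the hash function used here (SHA-256) and the helper name are stand-ins for illustration, not the algorithm the service actually uses.

```python
import hashlib
from collections import Counter

DISTRIBUTIONS = 60  # a dedicated SQL pool shards data into 60 distributions


def distribution_for(key: str) -> int:
    """Map a distribution-column value to a distribution (illustrative hash)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % DISTRIBUTIONS


# The same key always lands in the same distribution.
assert distribution_for("customer-42") == distribution_for("customer-42")

# Many distinct keys spread across the distributions.
spread = Counter(distribution_for(f"customer-{i}") for i in range(6_000))
print(len(spread), "distributions used")
```

Because equal keys always map to the same distribution, joins and aggregations on the distribution column can run without moving rows between distributions.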

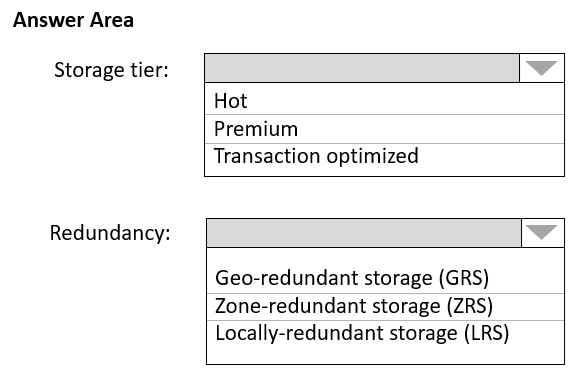

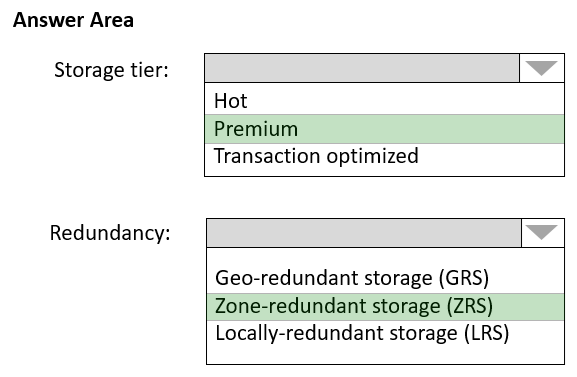

HOTSPOT -

You plan to create an Azure Storage account that will host file shares. The shares will be accessed from on-premises applications that are transaction intensive.

You need to recommend a solution to minimize latency when accessing the file shares. The solution must provide the highest level of resiliency for the selected storage tier.

What should you include in the recommendation? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: Premium

Premium: Premium file shares are backed by solid-state drives (SSDs) and provide consistent high performance and low latency, within single-digit milliseconds for most IO operations, for IO-intensive workloads.

Incorrect Answers:

✑ Hot: Hot file shares offer storage optimized for general purpose file sharing scenarios such as team shares. Hot file shares are offered on the standard storage hardware backed by HDDs.

✑ Transaction optimized: Transaction optimized file shares enable transaction heavy workloads that don't need the latency offered by premium file shares.

Transaction optimized file shares are offered on the standard storage hardware backed by hard disk drives (HDDs). Transaction optimized has historically been called "standard"; however, this refers to the storage media type rather than the tier itself (the hot and cool tiers are also "standard" tiers, because they are on standard storage hardware).

Box 2: Zone-redundant storage (ZRS)

Premium Azure file shares only support LRS and ZRS, so ZRS is the highest level of resiliency available for the premium tier.

Zone-redundant storage (ZRS): With ZRS, three copies of each file are stored, and these copies are physically isolated in three distinct storage clusters in different Azure availability zones.

Reference:

https://docs.microsoft.com/en-us/azure/storage/files/storage-files-planning

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You need to deploy resources to host a stateless web app in an Azure subscription. The solution must meet the following requirements:

✑ Provide access to the full .NET framework.

✑ Provide redundancy if an Azure region fails.

✑ Grant administrators access to the operating system to install custom application dependencies.

Solution: You deploy an Azure virtual machine scale set that uses autoscaling.

Does this meet the goal?

Answer:

B

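A virtual machine scale set is deployed to a single region, so it does not provide redundancy if that region fails. Instead, you should deploy two Azure virtual machines to two Azure regions and create a Traffic Manager profile.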

Note: Azure Traffic Manager is a DNS-based traffic load balancer that enables you to distribute traffic optimally to services across global Azure regions, while providing high availability and responsiveness.
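The failover behavior can be sketched as a priority-based choice among healthy endpoints. This is a simplified model, not Traffic Manager's implementation: the real service answers DNS queries and determines health through probes, and the endpoint names and regions below are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Endpoint:
    name: str
    region: str
    priority: int   # lower value = preferred
    healthy: bool


def resolve(endpoints: List[Endpoint]) -> Optional[Endpoint]:
    """Return the healthy endpoint with the lowest priority value, if any."""
    candidates = [e for e in endpoints if e.healthy]
    return min(candidates, key=lambda e: e.priority, default=None)


endpoints = [
    Endpoint("vm-primary", "westeurope", priority=1, healthy=True),
    Endpoint("vm-secondary", "northeurope", priority=2, healthy=True),
]
print(resolve(endpoints).name)   # vm-primary

endpoints[0].healthy = False     # simulate a regional outage
print(resolve(endpoints).name)   # vm-secondary
```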

Reference:

https://docs.microsoft.com/en-us/azure/traffic-manager/traffic-manager-overview

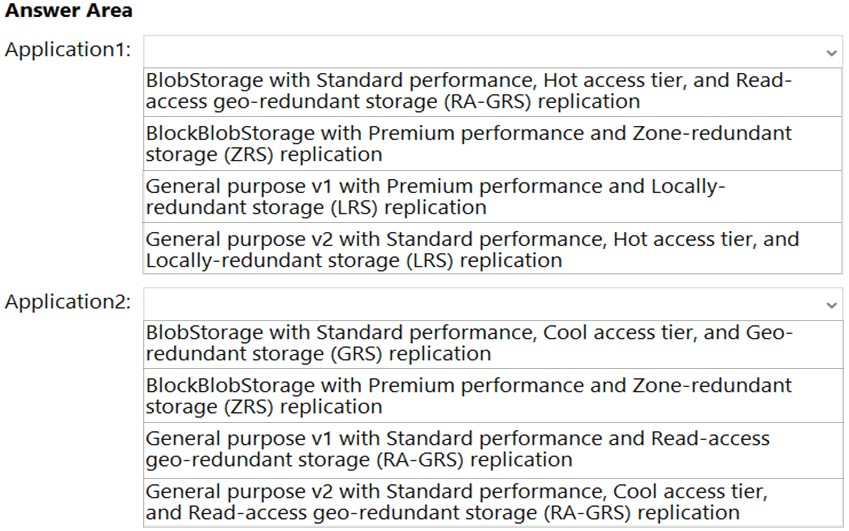

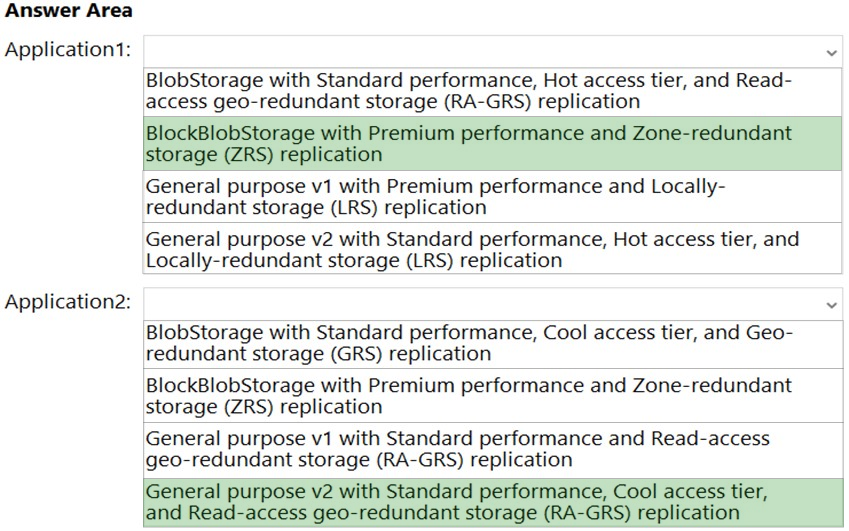

HOTSPOT -

You need to recommend an Azure Storage account configuration for two applications named Application1 and Application2. The configuration must meet the following requirements:

✑ Storage for Application1 must provide the highest possible transaction rates and the lowest possible latency.

✑ Storage for Application2 must provide the lowest possible storage costs per GB.

✑ Storage for both applications must be available in the event of a datacenter failure.

✑ Storage for both applications must be optimized for uploads and downloads.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: BlobStorage with Premium Performance, …

Application1 requires high transaction rates and the lowest possible latency. We need to use Premium, not Standard.

Box 2: General purpose v2 with Standard Performance, …

General Purpose v2 provides access to the latest Azure storage features, including Cool and Archive storage, with pricing optimized for the lowest GB storage prices. These accounts provide access to Block Blobs, Page Blobs, Files, and Queues. Recommended for most scenarios using Azure Storage.

Reference:

https://docs.microsoft.com/en-us/azure/storage/common/storage-account-upgrade

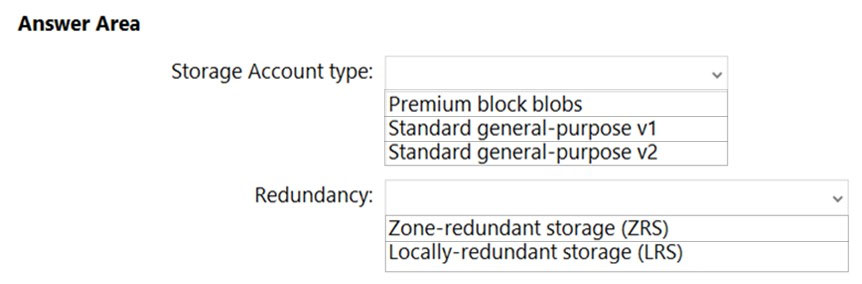

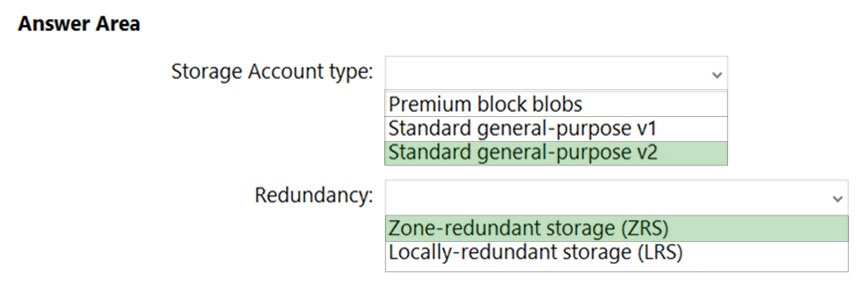

HOTSPOT -

You plan to develop a new app that will store business critical data. The app must meet the following requirements:

✑ Prevent new data from being modified for one year.

✑ Maximize data resiliency.

✑ Minimize read latency.

What storage solution should you recommend for the app? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: Standard general-purpose v2

Standard general-purpose v2 supports immutable storage.

In general, Standard general-purpose v2 is the account type that Microsoft recommends.

Box 2: Zone-redundant storage (ZRS)

ZRS is more resilient than LRS.

Note: RA-GRS is even more resilient, but it is not an option here.

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-immutable-storage

You plan to deploy 10 applications to Azure. The applications will be deployed to two Azure Kubernetes Service (AKS) clusters. Each cluster will be deployed to a separate Azure region.

The application deployment must meet the following requirements:

✑ Ensure that the applications remain available if a single AKS cluster fails.

✑ Ensure that the connection traffic over the internet is encrypted by using SSL without having to configure SSL on each container.

Which service should you include in the recommendation?

Answer:

A

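Azure Front Door supports SSL. It can terminate SSL/TLS connections at its edge, so SSL does not have to be configured on each container, and it can route traffic to the cluster in the other region if one AKS cluster fails.

Azure Front Door focuses on global load balancing and site acceleration, whereas Azure CDN Standard offers static content caching and acceleration.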

The new Azure Front Door brings together security with CDN technology for a cloud-based CDN with threat protection and additional capabilities.

Reference:

https://docs.microsoft.com/en-us/azure/frontdoor/front-door-overview