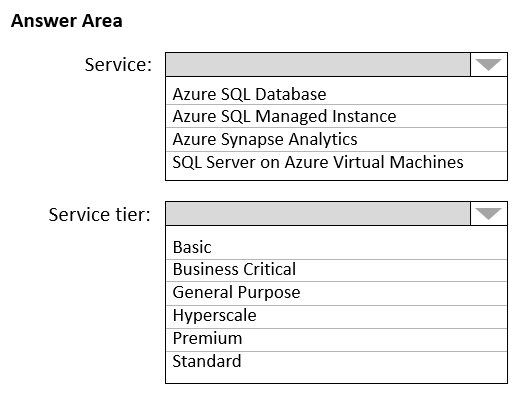

HOTSPOT -

You have an on-premises database that you plan to migrate to Azure.

You need to design the database architecture to meet the following requirements:

✑ Support scaling up and down.

✑ Support geo-redundant backups.

✑ Support a database of up to 75 TB.

✑ Be optimized for online transaction processing (OLTP).

What should you include in the design? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: Azure SQL Database -

Azure SQL Database:

Database size always depends on the underlying service tiers (e.g. Basic, Business Critical, Hyperscale).

With the Hyperscale service tier, it supports databases of up to 100 TB.

Active geo-replication is a feature that lets you create a continuously synchronized readable secondary database for a primary database. The readable secondary database may be in the same Azure region as the primary or, more commonly, in a different region. Readable secondary databases of this kind are also known as geo-secondaries or geo-replicas.

Azure SQL Database and SQL Managed Instance enable you to dynamically add more resources to your database with minimal downtime.

Box 2: Hyperscale -

Incorrect Answers:

✑ SQL Server on Azure VM: geo-replication is not supported.

✑ Azure Synapse Analytics is not optimized for online transaction processing (OLTP).

✑ Azure SQL Managed Instance: the maximum database size is capped by the currently available instance size, which depends on the number of vCores.

Max instance storage size (reserved):

- 2 TB for 4 vCores

- 8 TB for 8 vCores

- 16 TB for other sizes
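
The size argument can be sketched as a simple filter. This is a minimal illustration, not an official sizing tool: the limits are the figures quoted in this explanation (100 TB for Hyperscale, 16 TB as the largest Managed Instance storage size), and the dictionary and function names are hypothetical.

```python
# Maximum database sizes in TB, taken from the explanation in this answer.
# SQL Server on Azure VM and Azure Synapse Analytics are excluded there on
# other grounds (geo-replication, OLTP), so they are not modeled here.
MAX_DB_SIZE_TB = {
    "Azure SQL Database (Hyperscale)": 100,
    "Azure SQL Managed Instance": 16,
}


def options_that_fit(required_tb):
    """Return the options whose size limit covers required_tb."""
    return [name for name, limit in MAX_DB_SIZE_TB.items() if limit >= required_tb]


print(options_that_fit(75))  # ['Azure SQL Database (Hyperscale)']
```

Only the Hyperscale entry survives the 75 TB requirement, which matches Box 2.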

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/active-geo-replication-overview

https://medium.com/awesome-azure/azure-difference-between-azure-sql-database-and-sql-server-on-vm-comparison-azure-sql-vs-sql-server-vm-cf02578a1188

You are planning an Azure IoT Hub solution that will include 50,000 IoT devices.

Each device will stream data, including temperature, device ID, and time data. Approximately 50,000 records will be written every second. The data will be visualized in near real time.

You need to recommend a service to store and query the data.

Which two services can you recommend? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

Answer:

CD

D: Time Series Insights is a fully managed service for time series data. In this architecture, Time Series Insights performs the roles of stream processing, data store, and analytics and reporting. It accepts streaming data from either IoT Hub or Event Hubs and stores, processes, analyzes, and displays the data in near real time.

C: The processed data is stored in an analytical data store, such as Azure Data Explorer, HBase, Azure Cosmos DB, Azure Data Lake, or Blob Storage.
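
To get a feel for the ingest volume behind this recommendation, the arithmetic can be sketched as follows. The 50,000 records per second comes from the question; the 100-byte record size is purely an assumption for illustration and does not appear in the question.

```python
RECORDS_PER_SECOND = 50_000     # from the question
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400
ASSUMED_RECORD_BYTES = 100      # hypothetical record size, not from the question

records_per_day = RECORDS_PER_SECOND * SECONDS_PER_DAY
gigabytes_per_day = records_per_day * ASSUMED_RECORD_BYTES / 1e9

print(records_per_day)    # 4320000000
print(gigabytes_per_day)  # 432.0
```

At roughly 4.3 billion records per day, the store has to handle sustained high-rate writes as well as near-real-time queries.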

Reference:

https://docs.microsoft.com/en-us/azure/architecture/data-guide/scenarios/time-series

You are designing an application that will aggregate content for users.

You need to recommend a database solution for the application. The solution must meet the following requirements:

✑ Support SQL commands.

✑ Support multi-master writes.

✑ Guarantee low latency read operations.

What should you include in the recommendation?

Answer:

A

With Cosmos DB's novel multi-region (multi-master) writes replication protocol, every region supports both writes and reads. The multi-region writes capability also enables:

Unlimited elastic write and read scalability.

99.999% read and write availability all around the world.

Guaranteed reads and writes served in less than 10 milliseconds at the 99th percentile.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/distribute-data-globally

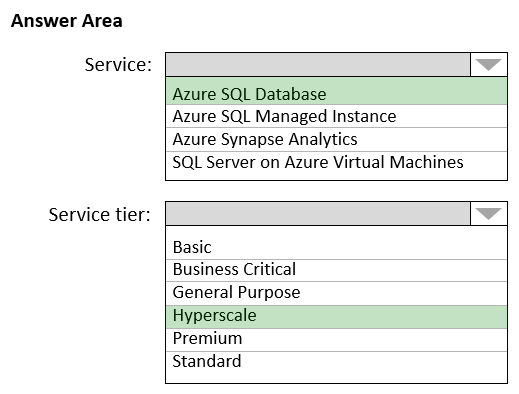

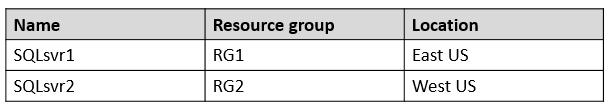

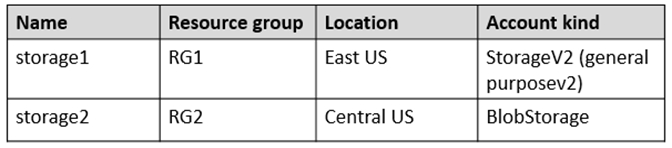

HOTSPOT -

You have an Azure subscription that contains the SQL servers on Azure shown in the following table.

The subscription contains the storage accounts shown in the following table.

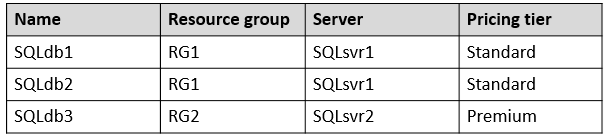

You create the Azure SQL databases shown in the following table.

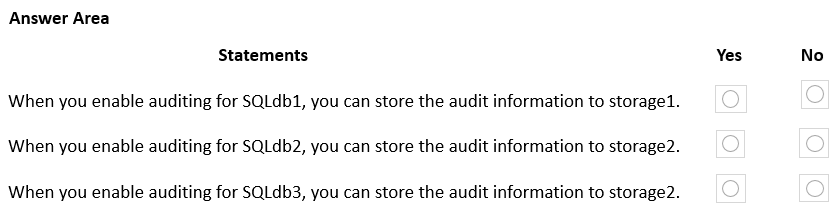

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: Yes -

Auditing is supported when the target is a Standard storage account.

Box 2: No -

Auditing limitations: Premium storage is currently not supported.

Box 3: No -

Auditing limitations: Premium storage is currently not supported.

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/auditing-overview#auditing-limitations

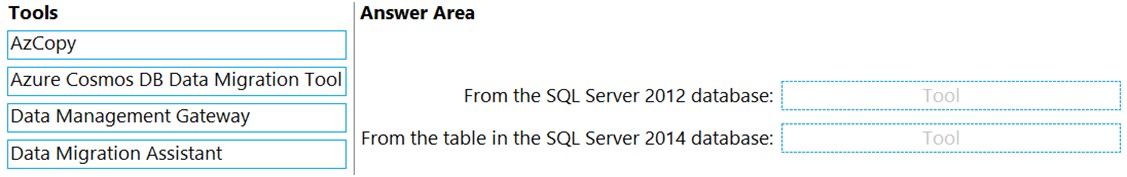

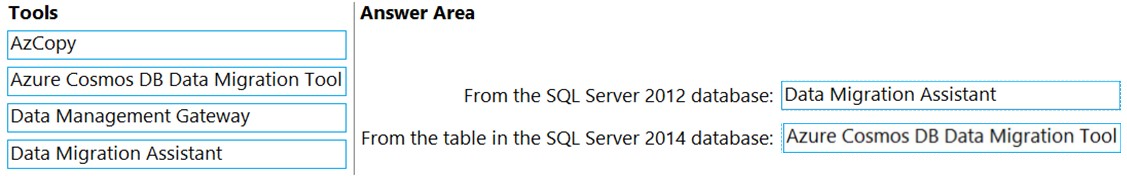

DRAG DROP -

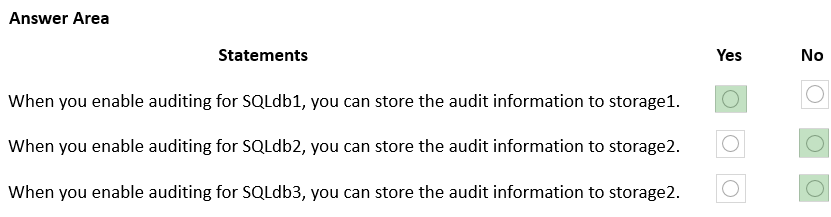

You plan to import data from your on-premises environment to Azure. The data is shown in the following table.

What should you recommend using to migrate the data? To answer, drag the appropriate tools to the correct data sources. Each tool may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

Answer:

Box 1: Data Migration Assistant -

The Data Migration Assistant (DMA) helps you upgrade to a modern data platform by detecting compatibility issues that can impact database functionality in your new version of SQL Server or Azure SQL Database. DMA recommends performance and reliability improvements for your target environment and allows you to move your schema, data, and uncontained objects from your source server to your target server.

Incorrect:

AzCopy is a command-line utility that you can use to copy blobs or files to or from a storage account.

Box 2: Azure Cosmos DB Data Migration Tool

The Azure Cosmos DB Data Migration Tool can be used to migrate a SQL Server database table to Azure Cosmos DB.

Reference:

https://docs.microsoft.com/en-us/sql/dma/dma-overview

https://docs.microsoft.com/en-us/azure/cosmos-db/cosmosdb-migrationchoices

You store web access logs data in Azure Blob Storage.

You plan to generate monthly reports from the access logs.

You need to recommend an automated process to upload the data to Azure SQL Database every month.

What should you include in the recommendation?

Answer:

D

You can create Data Factory pipelines that copy data from Azure Blob Storage to Azure SQL Database. The configuration pattern applies to copying from a file-based data store to a relational data store.

Required steps:

Create a data factory.

Create Azure Storage and Azure SQL Database linked services.

Create Azure Blob and Azure SQL Database datasets.

Create a pipeline that contains a Copy activity.

Start a pipeline run.

Monitor the pipeline and activity runs.
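
The pipeline step above can be sketched as the JSON definition that Data Factory stores for a Copy activity. This is a minimal sketch under stated assumptions: the pipeline, activity, and dataset names are hypothetical, the two datasets are assumed to exist already, and the `BlobSource` and `SqlSink` type names follow the referenced tutorial and may differ for other connector versions.

```python
import json

# Hypothetical names; both datasets must already be defined in the data factory.
pipeline = {
    "name": "CopyAccessLogsToSql",
    "properties": {
        "activities": [
            {
                "name": "CopyFromBlobToSql",
                "type": "Copy",
                "inputs": [
                    {"referenceName": "AccessLogsBlobDataset", "type": "DatasetReference"}
                ],
                "outputs": [
                    {"referenceName": "AccessLogsSqlDataset", "type": "DatasetReference"}
                ],
                "typeProperties": {
                    "source": {"type": "BlobSource"},  # read from Blob Storage
                    "sink": {"type": "SqlSink"},       # write to Azure SQL Database
                },
            }
        ]
    },
}

# Print the definition as JSON; deploying it is outside the scope of this sketch.
print(json.dumps(pipeline, indent=2))
```

The sketch covers only the copy itself. Running it every month would additionally need a trigger on the pipeline, which is not shown here.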

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/tutorial-copy-data-dot-net

You have an Azure subscription.

Your on-premises network contains a file server named Server1. Server1 stores 5 TB of company files that are accessed rarely.

You plan to copy the files to Azure Storage.

You need to implement a storage solution for the files that meets the following requirements:

✑ The files must be available within 24 hours of being requested.

✑ Storage costs must be minimized.

Which two possible storage solutions achieve this goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

Answer:

AD

To minimize costs: The Archive tier is optimized for storing data that is rarely accessed and stored for at least 180 days with flexible latency requirements (on the order of hours).
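
The reasoning can be sketched as "filter by retrieval time, then pick the cheapest storage". This is an illustration only: the cost values are relative ranks rather than prices, and the retrieval hours are assumptions (the explanation above only says Archive latency is on the order of hours). The `Tier` class and `cheapest_tier` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    storage_cost_rank: int      # 1 = cheapest to store (relative rank, not a price)
    max_retrieval_hours: float  # assumed worst-case wait before data is readable


# Illustrative values only; check current Azure documentation for real figures.
TIERS = [
    Tier("Hot", 3, 0),
    Tier("Cool", 2, 0),
    Tier("Archive", 1, 15),
]


def cheapest_tier(max_wait_hours):
    """Pick the cheapest tier whose assumed retrieval time fits the limit."""
    candidates = [t for t in TIERS if t.max_retrieval_hours <= max_wait_hours]
    return min(candidates, key=lambda t: t.storage_cost_rank)


print(cheapest_tier(24).name)  # Archive
print(cheapest_tier(1).name)   # Cool
```

With a 24-hour allowance, Archive qualifies and wins on storage cost; a tighter limit would push the choice to an online tier.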

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-blob-storage-tiers

You have an app named App1 that uses two on-premises Microsoft SQL Server databases named DB1 and DB2.

You plan to migrate DB1 and DB2 to Azure.

You need to recommend an Azure solution to host DB1 and DB2. The solution must meet the following requirements:

✑ Support server-side transactions across DB1 and DB2.

✑ Minimize administrative effort to update the solution.

What should you recommend?

Answer:

B

Elastic database transactions for Azure SQL Database and Azure SQL Managed Instance allow you to run transactions that span several databases.

SQL Managed Instance enables system administrators to spend less time on administrative tasks because the service either performs them for you or greatly simplifies those tasks.

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/elastic-transactions-overview?view=azuresql

You need to design a highly available Azure SQL database that meets the following requirements:

✑ Failover between replicas of the database must occur without any data loss.

✑ The database must remain available in the event of a zone outage.

✑ Costs must be minimized.

Which deployment option should you use?

Answer:

B

The Azure SQL Database Premium tier supports multiple redundant replicas for each database that are automatically provisioned in the same datacenter within a region. This design leverages SQL Server Always On technology and provides resilience to server failures with a 99.99% availability SLA and RPO=0.

With the introduction of Azure Availability Zones, we are happy to announce that SQL Database now offers built-in support of Availability Zones in its Premium service tier.

Incorrect:

Not A: Hyperscale is more expensive than Premium.

Not C: Need Premium for Availability Zones.

Not D: The zone-redundant configuration, which is free on Azure SQL Premium, is not available on Azure SQL Managed Instance.

Reference:

https://azure.microsoft.com/en-us/blog/azure-sql-database-now-offers-zone-redundant-premium-databases-and-elastic-pools/

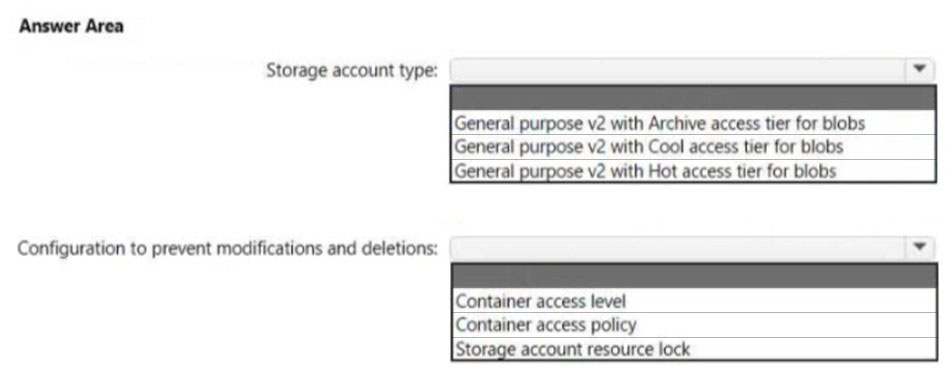

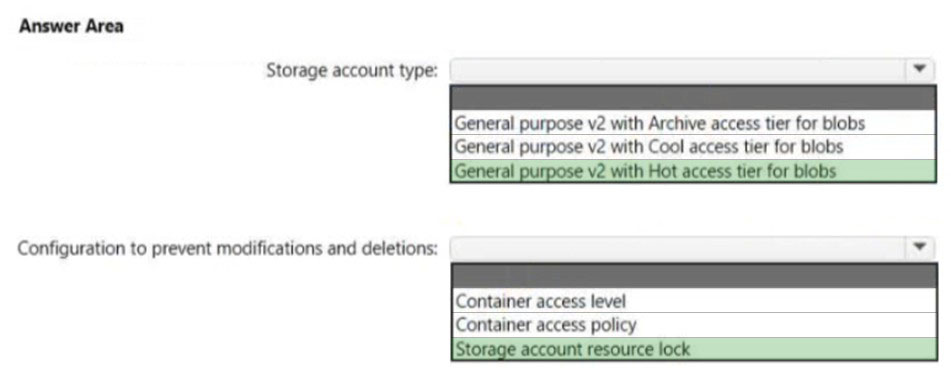

HOTSPOT -

You are planning an Azure Storage solution for sensitive data. The data will be accessed daily. The dataset is less than 10 GB.

You need to recommend a storage solution that meets the following requirements:

✑ All the data written to storage must be retained for five years.

✑ Once the data is written, the data can only be read. Modifications and deletion must be prevented.

✑ After five years, the data can be deleted, but never modified.

✑ Data access charges must be minimized.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: General purpose v2 with Hot access tier for blobs

Note:

* All the data written to storage must be retained for five years.

* Data access charges must be minimized.

Hot tier has higher storage costs, but lower access and transaction costs.

Incorrect:

Not Archive: Lowest storage costs, but highest access and transaction costs.

Not Cool: Lower storage costs, but higher access and transaction costs.

Box 2: Storage account resource lock

As an administrator, you can lock a subscription, resource group, or resource to prevent other users in your organization from accidentally deleting or modifying critical resources. The lock overrides any permissions the user might have.

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/access-tiers-overview

https://docs.microsoft.com/en-us/azure/azure-resource-manager/management/lock-resources