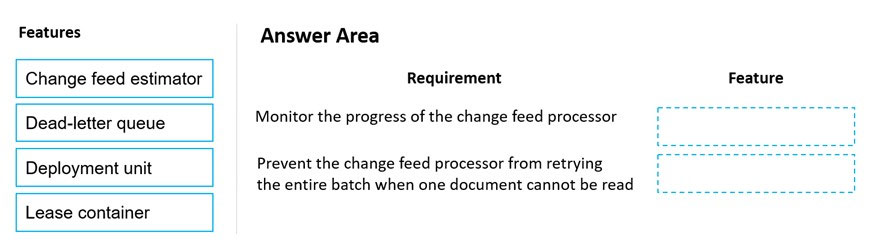

DRAG DROP -

You are implementing an Azure solution that uses Azure Cosmos DB and the latest Azure Cosmos DB SDK. You add a change feed processor to a new container instance.

You attempt to read a batch of 100 documents. The process fails when reading one of the documents. The solution must monitor the progress of the change feed processor instance on the new container as the change feed is read. You must prevent the change feed processor from retrying the entire batch when one document cannot be read.

You need to implement the change feed processor to read the documents.

Which features should you use? To answer, drag the appropriate features to the correct requirements. Each feature may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:

Answer:

Box 1: Change feed estimator -

You can use the change feed estimator to monitor the progress of your change feed processor instances as they read the change feed or use the life cycle notifications to detect underlying failures.

Box 2: Dead-letter queue -

To prevent your change feed processor from getting "stuck" continuously retrying the same batch of changes, you should add logic in your delegate code to write documents, upon exception, to a dead-letter queue. This design ensures that you can keep track of unprocessed changes while still being able to continue to process future changes. The dead-letter queue might be another Cosmos container. The exact data store does not matter, simply that the unprocessed changes are persisted.
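The dead-letter pattern described above can be sketched in plain Python. This is a minimal local illustration, not the Azure Cosmos DB SDK: `handle_changes`, `process`, and the in-memory `dead_letter_queue` list are hypothetical names, and a real implementation would persist failures to a durable store such as another container.

```python
from typing import Any, Callable, Dict, List

Document = Dict[str, Any]


def handle_changes(
    changes: List[Document],
    process: Callable[[Document], None],
    dead_letter: List[Document],
) -> int:
    """Process each change; park failures instead of failing the whole batch."""
    processed = 0
    for doc in changes:
        try:
            process(doc)
            processed += 1
        except Exception as exc:  # broad on purpose: any failure is dead-lettered
            dead_letter.append({"document": doc, "error": str(exc)})
    return processed


def process(doc: Document) -> None:
    # Hypothetical business logic that rejects a document without a "total".
    if "total" not in doc:
        raise ValueError(f"document {doc['id']} has no total")


batch = [{"id": "1", "total": 10}, {"id": "2"}, {"id": "3", "total": 5}]
dead_letter_queue: List[Document] = []
processed_count = handle_changes(batch, process, dead_letter_queue)
```

Because the exception is caught per document, the handler returns normally and the batch is not retried; only document "2" ends up in the dead-letter list.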

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/change-feed-processor

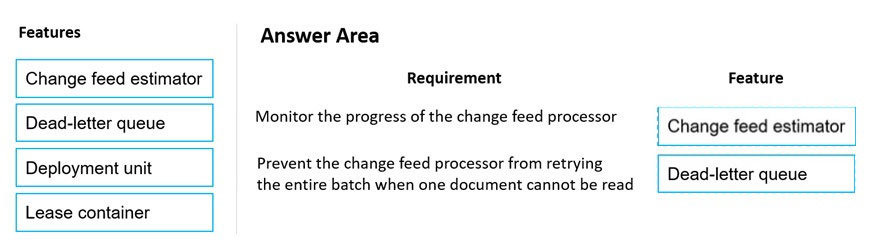

HOTSPOT -

You are developing an application that uses a premium block blob storage account. The application will process a large volume of transactions daily. You enable Blob storage versioning.

You are optimizing costs by automating Azure Blob Storage access tiers. You apply the following policy rules to the storage account. (Line numbers are included for reference only.)

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: No -

This would be true if daysAfterModificationGreaterThan were used, but the policy uses daysAfterCreationGreaterThan.

Box 2: No -

The rule would need to use the daysAfterLastAccessTimeGreaterThan predicate.

Box 3: Yes -

Box 4: Yes -

With the lifecycle management policy, you can:

Transition blobs from cool to hot immediately when they are accessed, to optimize for performance.
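For illustration only, a lifecycle rule that uses the predicates named above might look like the following, built here as a Python dictionary and serialized to JSON. This is not the policy from the question (which is not reproduced here); the rule name and the 30-day threshold are assumptions. Last access time tracking has to be enabled on the account for the last-access predicate to apply.

```python
import json

# Hypothetical rule: the name and the 30-day threshold are assumptions.
policy = {
    "rules": [
        {
            "enabled": True,
            "name": "cool-after-30-days-without-access",
            "type": "Lifecycle",
            "definition": {
                "actions": {
                    "baseBlob": {
                        # Move a blob back to hot as soon as it is accessed.
                        "enableAutoTierToHotFromCool": True,
                        # Move to cool after 30 days without access.
                        "tierToCool": {"daysAfterLastAccessTimeGreaterThan": 30},
                    }
                },
                "filters": {"blobTypes": ["blockBlob"]},
            },
        }
    ]
}

policy_json = json.dumps(policy, indent=2)
```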

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/lifecycle-management-overview

An organization deploys Azure Cosmos DB.

You need to ensure that the index is updated as items are created, updated, or deleted.

What should you do?

Answer:

D

Azure Cosmos DB supports two indexing modes:

Consistent: The index is updated synchronously as you create, update or delete items. This means that the consistency of your read queries will be the consistency configured for the account.

None: Indexing is disabled on the container.
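As a rough sketch, an indexing policy in consistent mode can be written as the following Python dictionary, which mirrors the JSON shape of a container's indexing policy. The included and excluded paths shown are the commonly documented defaults and are assumptions here, since the question does not show a policy.

```python
import json

# Consistent mode: the index is updated synchronously with each write.
indexing_policy = {
    "indexingMode": "consistent",
    "automatic": True,
    "includedPaths": [{"path": "/*"}],
    "excludedPaths": [{"path": '/"_etag"/?'}],
}

indexing_policy_json = json.dumps(indexing_policy, indent=2)
```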

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/index-policy

You are developing a .NET web application that stores data in Azure Cosmos DB. The application must use the Core API and allow millions of reads and writes.

The Azure Cosmos DB account has been created with multiple write regions enabled. The application has been deployed to the East US2 and Central US regions.

You need to update the application to support multi-region writes.

What are two possible ways to achieve this goal? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

Answer:

CD

C: The UseMultipleWriteLocations property of the ConnectionPolicy class gets or sets the flag to enable writes on any locations (regions) for geo-replicated database accounts in the Azure Cosmos DB service.

Note: Once an account has been created with multiple write regions enabled, you must make two changes in your application to the ConnectionPolicy for the Cosmos client to enable multi-region writes in Azure Cosmos DB. Within the ConnectionPolicy, set UseMultipleWriteLocations to true and pass the name of the region where the application is deployed to ApplicationRegion. This populates the PreferredLocations property based on geo-proximity to the location passed in. If a new region is later added to the account, the application does not have to be updated or redeployed; it automatically detects the closer region and auto-homes to it should a regional event occur.

D: With multi-region writes, when multiple clients write to the same item, conflicts may occur. When a conflict occurs, you can resolve the conflict by using different conflict resolution policies.

Note: Conflict resolution policy can only be specified at container creation time and cannot be modified after container creation.
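To make the idea of a conflict resolution policy concrete, here is a small local simulation of last-writer-wins, one of the policies Azure Cosmos DB offers, where the version with the highest value at the resolution path (the `_ts` timestamp by default) wins. The function name and sample items are hypothetical, and this does not call the SDK; the service applies the policy on its side.

```python
from typing import Any, Dict, List

Item = Dict[str, Any]


def resolve_last_writer_wins(versions: List[Item], path: str = "_ts") -> Item:
    """Return the conflicting version with the highest value at `path`."""
    if not versions:
        raise ValueError("at least one version is required")
    return max(versions, key=lambda version: version[path])


# Two regions wrote the same item at slightly different times.
east_us2_write = {"id": "order-1", "status": "paid", "_ts": 1700000005}
central_us_write = {"id": "order-1", "status": "shipped", "_ts": 1700000009}

winner = resolve_last_writer_wins([east_us2_write, central_us_write])
```

Here the Central US write wins because its `_ts` value is larger.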

Reference:

https://docs.microsoft.com/en-us/dotnet/api/microsoft.azure.documents.client.connectionpolicy

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/how-to-multi-master

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/how-to-manage-conflicts

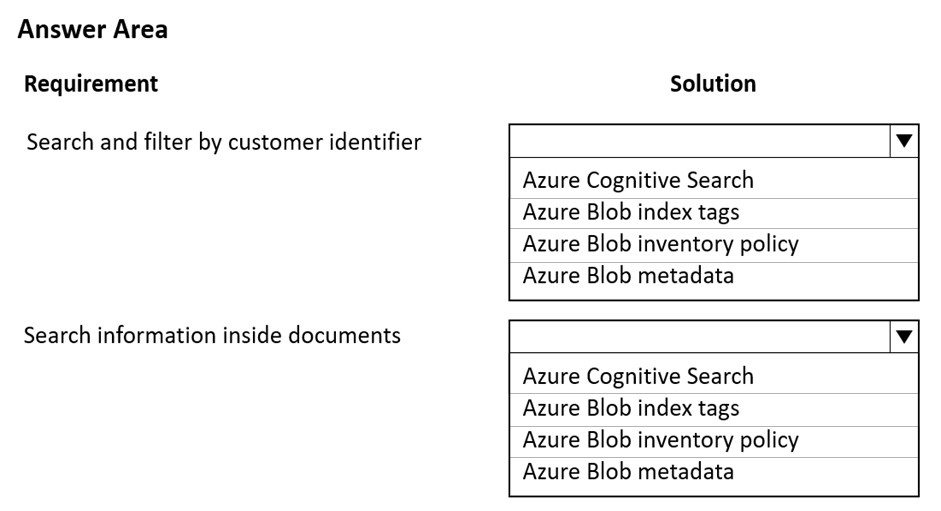

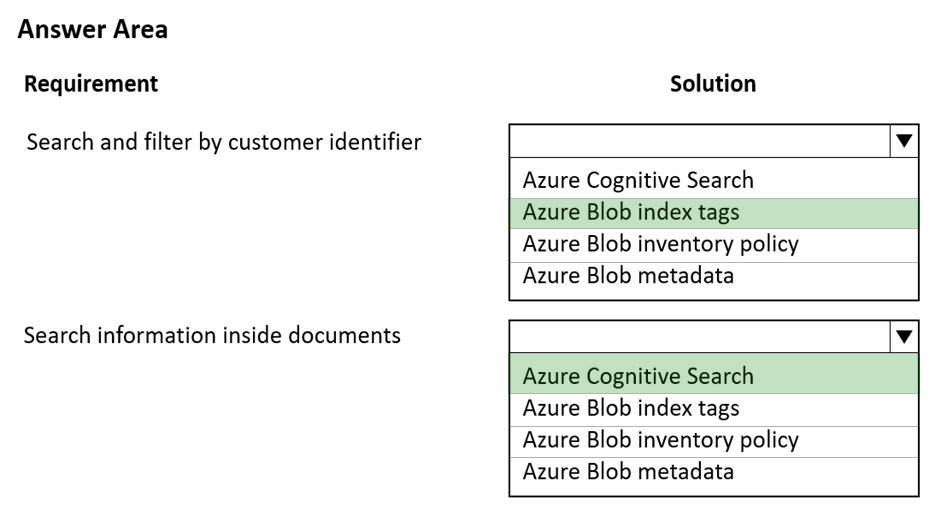

HOTSPOT -

You are developing a solution to store documents in Azure Blob storage. Customers upload documents to multiple containers. Documents consist of PDF, CSV, Microsoft Office format, and plain text files.

The solution must process millions of documents across hundreds of containers. The solution must meet the following requirements:

✑ Documents must be categorized by a customer identifier as they are uploaded to the storage account.

✑ Allow filtering by the customer identifier.

✑ Allow searching of information contained within a document

✑ Minimize costs.

You create and configure a standard general-purpose v2 storage account to support the solution.

You need to implement the solution.

What should you implement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: Azure Blob index tags -

As datasets get larger, finding a specific object in a sea of data can be difficult. Blob index tags provide data management and discovery capabilities by using key-value index tag attributes. You can categorize and find objects within a single container or across all containers in your storage account. As data requirements change, objects can be dynamically categorized by updating their index tags. Objects can remain in-place with their current container organization.
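Filtering by a customer identifier relies on a tag filter expression. The helper below is a minimal sketch that only builds such an expression string; the tag key `customerId`, the sample values, and the function name are assumptions for illustration. An expression of this shape is what a call such as the Python SDK's `find_blobs_by_tags` would take.

```python
from typing import Optional


def build_tag_filter(key: str, value: str, container: Optional[str] = None) -> str:
    """Build a blob index tag filter, optionally scoped to one container."""
    if '"' in key or "'" in value or (container and "'" in container):
        raise ValueError("quotes are not allowed in tag keys, values, or container names")
    expression = f"\"{key}\" = '{value}'"
    if container:
        expression = f"@container = '{container}' AND {expression}"
    return expression


across_account = build_tag_filter("customerId", "contoso-42")
single_container = build_tag_filter("customerId", "contoso-42", container="invoices")
```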

Box 2: Azure Cognitive Search -

Only index tags are automatically indexed and made searchable by the native Blob Storage service. Metadata can't be natively indexed or searched. You must use a separate service such as Azure Cognitive Search.

Azure Cognitive Search is the only cloud search service with built-in AI capabilities that enrich all types of information to help you identify and explore relevant content at scale. Use cognitive skills for vision, language, and speech, or use custom machine learning models to uncover insights from all types of content.

Reference:

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-manage-find-blobs

https://azure.microsoft.com/en-us/services/search/

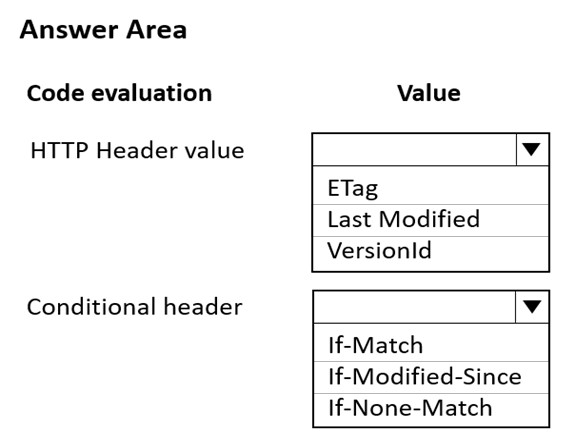

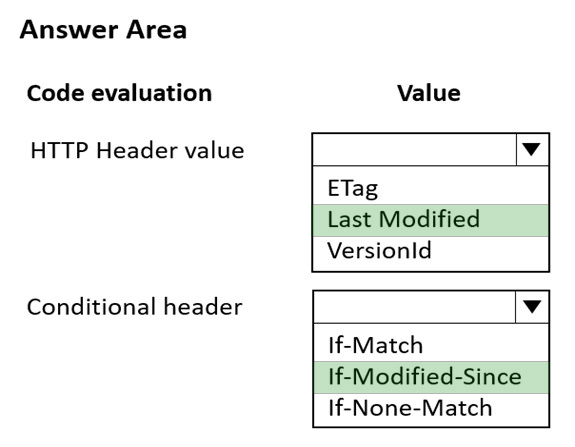

HOTSPOT -

You are developing a web application by using the Azure SDK. The web application accesses data in a zone-redundant BlockBlobStorage storage account.

The application must determine whether the data has changed since the application last read the data. Update operations must use the latest data changes when writing data to the storage account.

You need to implement the update operations.

Which values should you use? To answer, select the appropriate option in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: Last Modified -

The Last-Modified response HTTP header contains a date and time when the origin server believes the resource was last modified. It is used as a validator to determine if the resource is the same as the previously stored one. Less accurate than an ETag header, it is a fallback mechanism.

Box 2: If-Modified-Since -

Conditional Header If-Modified-Since:

A DateTime value. Specify this header to perform the operation only if the resource has been modified since the specified time.

Incorrect:

Not ETag/If-Match -

Conditional Header If-Match:

An ETag value. Specify this header to perform the operation only if the resource's ETag matches the value specified. For versions 2011-08-18 and newer, the ETag can be specified in quotes.
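The semantics of the two conditional headers described above can be sketched as simple predicates. This is a local model of the checks the service performs, not an SDK call; the function names, timestamps, and ETag strings are hypothetical, and special values such as a wildcard ETag are not modeled.

```python
from datetime import datetime, timezone


def if_modified_since_passes(last_modified: datetime, header_value: datetime) -> bool:
    """The operation proceeds only if the resource changed after header_value."""
    return last_modified > header_value


def if_match_passes(current_etag: str, header_value: str) -> bool:
    """The operation proceeds only if the stored ETag equals the supplied one."""
    return current_etag == header_value


last_read = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
blob_last_modified = datetime(2024, 1, 1, 12, 5, tzinfo=timezone.utc)

# The blob was modified five minutes after the application last read it.
changed_since_read = if_modified_since_passes(blob_last_modified, last_read)

# The ETag captured at read time no longer matches the stored ETag.
etag_still_current = if_match_passes('"0x8D1"', '"0x8D0"')
```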

Reference:

https://developer.mozilla.org/en-US/docs/Web/HTTP/Headers/Last-Modified

https://docs.microsoft.com/en-us/rest/api/storageservices/specifying-conditional-headers-for-blob-service-operations

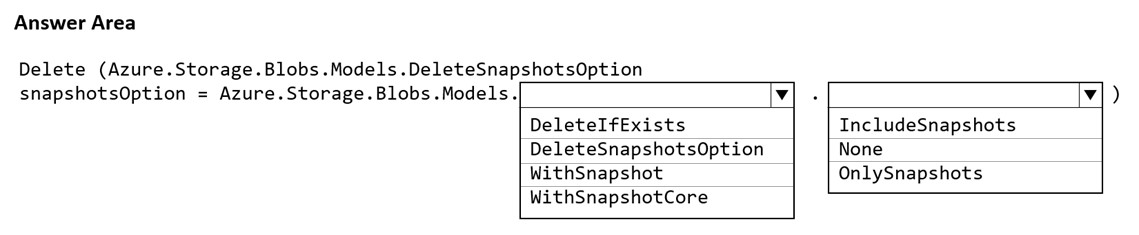

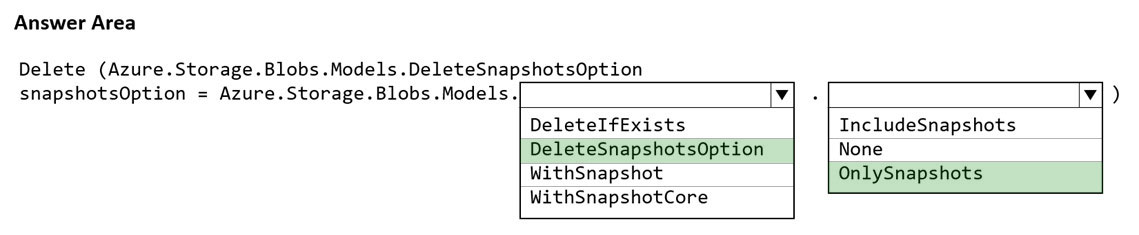

HOTSPOT -

An organization deploys a blob storage account. Users take multiple snapshots of the blob storage account over time.

You need to delete all snapshots of the blob storage account. You must not delete the blob storage account itself.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: DeleteSnapshotsOption -

Sample code in C#:

// Pass IncludeSnapshots so each blob is deleted together with its snapshots

await batchClient.DeleteBlobsAsync(listofURIforBlobs,

Azure.Storage.Blobs.Models.DeleteSnapshotsOption.IncludeSnapshots);

Sample code in .NET (batch API):

// Create a batch with three deletes

BlobBatchClient batchClient = service.GetBlobBatchClient();

BlobBatch batch = batchClient.CreateBatch();

batch.DeleteBlob(foo.Uri, DeleteSnapshotsOption.IncludeSnapshots);

batch.DeleteBlob(bar.Uri, DeleteSnapshotsOption.OnlySnapshots);

batch.DeleteBlob(baz.Uri);

// Submit the batch

batchClient.SubmitBatch(batch);

Box 2: OnlySnapshots -

Reference:

https://docs.microsoft.com/en-us/dotnet/api/overview/azure/storage.blobs.batch-readme

https://stackoverflow.com/questions/39471212/programmatically-delete-azure-blob-storage-objects-in-bulks

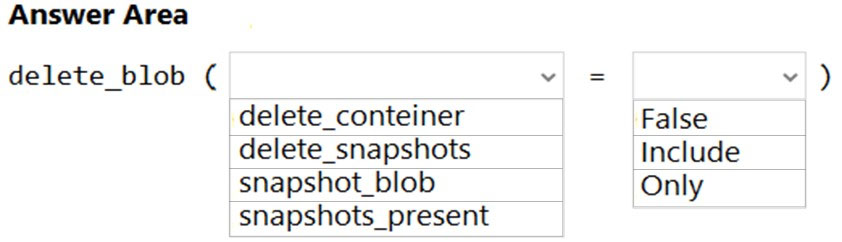

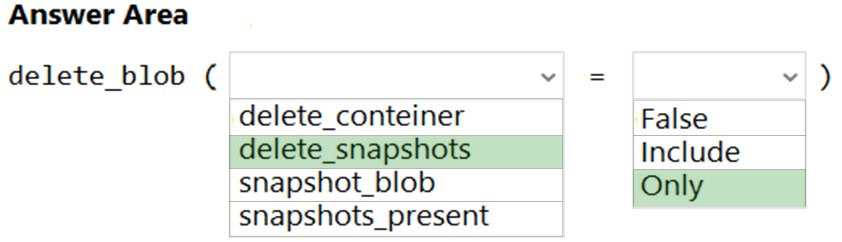

HOTSPOT -

An organization deploys a blob storage account. Users take multiple snapshots of the blob storage account over time.

You need to delete all snapshots of the blob storage account. You must not delete the blob storage account itself.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: delete_snapshots -

# Delete only the snapshot (blob itself is retained)

blob_client.delete_blob(delete_snapshots="only")

Box 2: only -

Reference:

https://github.com/Azure/azure-sdk-for-python/blob/main/sdk/storage/azure-storage-blob/samples/blob_samples_common.py

HOTSPOT -

You are developing an application that monitors data added to an Azure Blob storage account.

You need to process each change made to the storage account.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer:

HOTSPOT -

You develop an application that sells AI generated images based on user input. You recently started a marketing campaign that displays unique ads every second day.

Sales data is stored in Azure Cosmos DB with the date of each sale being stored in a property named ‘whenFinished’.

The marketing department requires a view that shows the number of sales for each unique ad.

You need to implement the query for the view.

How should you complete the query? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer: