You have an app named App1 that uses two on-premises Microsoft SQL Server databases named DB1 and DB2.

You plan to migrate DB1 and DB2 to Azure.

You need to recommend an Azure solution to host DB1 and DB2. The solution must meet the following requirements:

✑ Support server-side transactions across DB1 and DB2.

✑ Minimize administrative effort to update the solution.

What should you recommend?

Answer:

C

SQL Managed Instance enables system administrators to spend less time on administrative tasks because the service either performs them for you or greatly simplifies those tasks.

Note: Azure SQL Managed Instance is designed for customers looking to migrate a large number of apps from an on-premises or IaaS, self-built, or ISV-provided environment to a fully managed PaaS cloud environment, with as low a migration effort as possible. Using the fully automated Azure Database Migration Service, customers can lift and shift their existing SQL Server instance to SQL Managed Instance, which offers compatibility with SQL Server and complete isolation of customer instances with native VNet support. With Software Assurance, you can exchange your existing licenses for discounted rates on SQL Managed Instance using the Azure Hybrid Benefit for SQL Server. SQL Managed Instance is the best migration destination in the cloud for SQL Server instances that require high security and a rich programmability surface.
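The "server-side transactions across DB1 and DB2" requirement means that a single transaction must either commit in both databases or roll back in both. The sketch below illustrates only that all-or-nothing behavior. It uses Python's built-in `sqlite3` module with two attached in-memory databases as a stand-in, not SQL Managed Instance itself. The table names (`stock`, `orders`) and the helper functions are hypothetical.

```python
import sqlite3


def open_two_databases():
    """Open one connection that can see two databases (stand-ins for DB1 and DB2)."""
    conn = sqlite3.connect(":memory:")                  # plays the role of DB1
    conn.execute("ATTACH DATABASE ':memory:' AS db2")   # plays the role of DB2
    conn.execute("CREATE TABLE main.stock (item TEXT PRIMARY KEY, qty INTEGER)")
    conn.execute("CREATE TABLE db2.orders (item TEXT, qty INTEGER)")
    conn.execute("INSERT INTO main.stock VALUES ('widget', 10)")
    conn.commit()
    return conn


def place_order(conn, item, qty, fail_midway=False):
    """Write to both databases atomically; True on commit, False on rollback."""
    try:
        with conn:  # commits on success, rolls back if the block raises
            conn.execute(
                "UPDATE main.stock SET qty = qty - ? WHERE item = ?", (qty, item)
            )
            if fail_midway:
                raise RuntimeError("simulated failure between the two writes")
            conn.execute("INSERT INTO db2.orders VALUES (?, ?)", (item, qty))
        return True
    except RuntimeError:
        return False


def snapshot(conn):
    """Return (remaining widget stock, number of orders) for inspection."""
    stock = conn.execute(
        "SELECT qty FROM main.stock WHERE item = 'widget'"
    ).fetchone()[0]
    orders = conn.execute("SELECT COUNT(*) FROM db2.orders").fetchone()[0]
    return stock, orders
```

When `fail_midway=True`, the stock update in the first database is undone because the order insert in the second database never happened. That is the guarantee the requirement asks for.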

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/managed-instance/sql-managed-instance-paas-overview

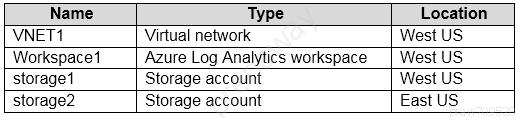

You have an Azure subscription that contains the resources shown in the following table.

You need to archive the diagnostic data for VNET1 for 365 days. The solution must minimize costs.

Where should you archive the data?

Answer:

B

Incorrect Answers:

A: When you create a new workspace, it automatically creates several Azure resources that are used by the workspace:

✑ Azure Storage account: Is used as the default datastore for the workspace.

Note: The workspace is the top-level resource for Azure Machine Learning, providing a centralized place to work with all the artifacts you create when you use Azure Machine Learning.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/concept-workspace

You plan to create an Azure Cosmos DB account that uses the SQL API. The account will contain data added by a web application. The web application will send data daily.

You need to recommend a notification solution that meets the following requirements:

✑ Sends email notifications when data is received from the web application

✑ Minimizes compute cost

What should you include in the recommendation?

Answer:

C

You can send email by using SendGrid bindings in Azure Functions. Azure Functions supports an output binding for SendGrid.

Note: When you're using the Consumption plan, instances of the Azure Functions host are dynamically added and removed based on the number of incoming events.
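As a rough illustration of what such a function might hand to the SendGrid output binding, the sketch below builds a mail payload from a batch of received documents. It is plain Python with no Azure SDK imports. The helper name, the email addresses, and the assumption that each document has an `id` field are all hypothetical. The payload shape (`personalizations`, `from`, `subject`, `content`) follows the SendGrid v3 mail format as I understand it, so verify it against the binding documentation.

```python
import json


def build_sendgrid_message(documents, to_email, from_email):
    """Return a JSON string describing one notification email (hypothetical helper)."""
    # Assumption: each incoming document is a dict with an "id" field.
    ids = [str(doc.get("id", "<no id>")) for doc in documents]
    body = "Received {} document(s): {}".format(len(ids), ", ".join(ids))
    message = {
        "personalizations": [{"to": [{"email": to_email}]}],
        "from": {"email": from_email},
        "subject": "New data received from the web application",
        "content": [{"type": "text/plain", "value": body}],
    }
    return json.dumps(message)


if __name__ == "__main__":
    # In a real function, a trigger would supply the documents and the returned
    # string would be set on the output binding; here we just print it.
    print(build_sendgrid_message([{"id": "a1"}], "ops@example.com", "noreply@example.com"))
```

Because the web application sends data only once a day, a function like this would run briefly and then scale back to zero on the Consumption plan, which is what keeps the compute cost low.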

Reference:

https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-sendgrid

https://docs.microsoft.com/en-us/azure/azure-functions/functions-scale#consumption-plan

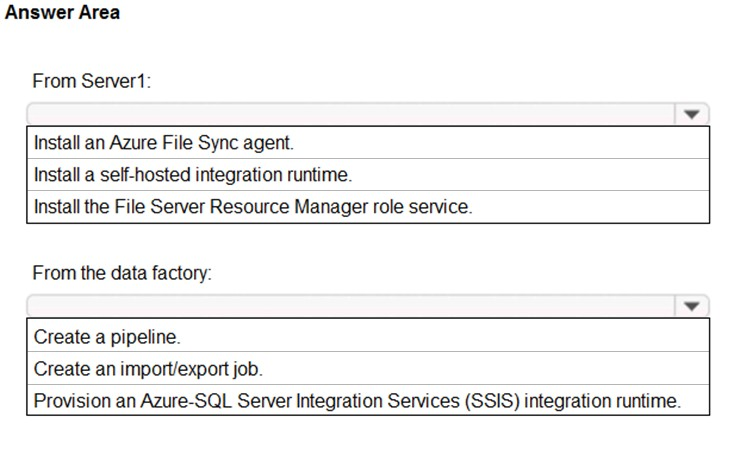

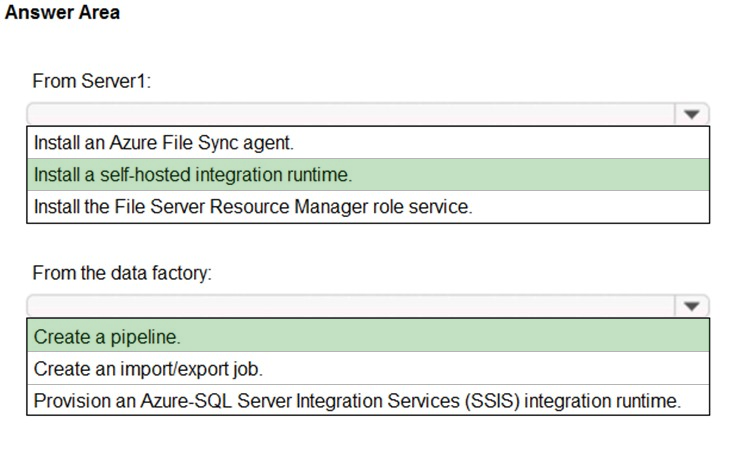

HOTSPOT -

Your on-premises network contains a file server named Server1 that stores 500 GB of data.

You need to use Azure Data Factory to copy the data from Server1 to Azure Storage.

You add a new data factory.

What should you do next? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

Box 1: Install a self-hosted integration runtime

The Integration Runtime is a customer-managed data integration infrastructure used by Azure Data Factory to provide data integration capabilities across different network environments.

Box 2: Create a pipeline -

With ADF, existing data processing services can be composed into data pipelines that are highly available and managed in the cloud. These data pipelines can be scheduled to ingest, prepare, transform, analyze, and publish data, and ADF manages and orchestrates the complex data and processing dependencies.
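To make the two boxes concrete, the sketch below builds, as plain Python dictionaries, the kind of JSON definitions involved. One is a file-server linked service that connects through a self-hosted integration runtime, and the other is a pipeline with one Copy activity. All resource names are hypothetical and credentials are omitted. The field names reflect the JSON shape I understand Data Factory to use, so treat them as assumptions and check the current documentation before relying on them.

```python
import json


def linked_service_for_file_server(name, host, runtime_name):
    """Describe a file-server connection routed through a self-hosted runtime."""
    return {
        "name": name,
        "properties": {
            "type": "FileServer",
            "typeProperties": {"host": host},  # credentials intentionally omitted
            "connectVia": {
                "referenceName": runtime_name,
                "type": "IntegrationRuntimeReference",
            },
        },
    }


def copy_pipeline(name, source_dataset, sink_dataset):
    """Describe a pipeline with one Copy activity from a file share to Blob storage."""
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopyServer1ToBlob",
                    "type": "Copy",
                    "inputs": [
                        {"referenceName": source_dataset, "type": "DatasetReference"}
                    ],
                    "outputs": [
                        {"referenceName": sink_dataset, "type": "DatasetReference"}
                    ],
                    "typeProperties": {
                        "source": {"type": "FileSystemSource", "recursive": True},
                        "sink": {"type": "BlobSink"},
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical names, printed only to show the resulting JSON.
    service = linked_service_for_file_server("Server1Files", "\\\\Server1\\share", "SelfHostedIR1")
    pipeline = copy_pipeline("CopyServer1Data", "Server1Dataset", "BlobDataset")
    print(json.dumps([service, pipeline], indent=2))
```

The self-hosted runtime is referenced from the linked service rather than from the pipeline. That is why it has to exist before the pipeline can reach Server1.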

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/team-data-science-process/move-sql-azure-adf

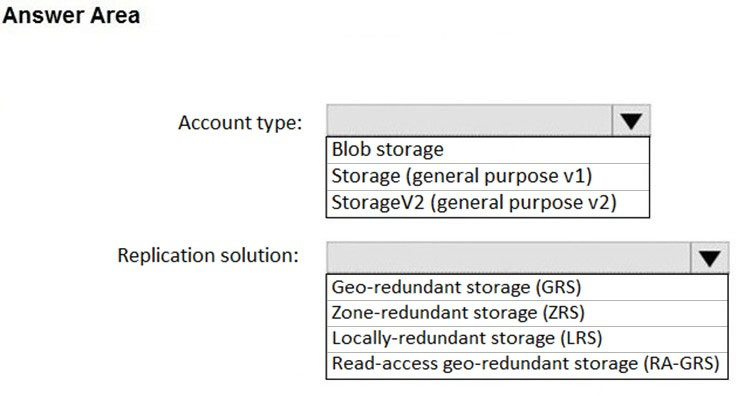

HOTSPOT -

You have an on-premises file server that stores 2 TB of data files.

You plan to move the data files to Azure Blob storage in the Central Europe region.

You need to recommend a storage account type to store the data files and a replication solution for the storage account. The solution must meet the following requirements:

✑ Be available if a single Azure datacenter fails.

✑ Support storage tiers.

✑ Minimize cost.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

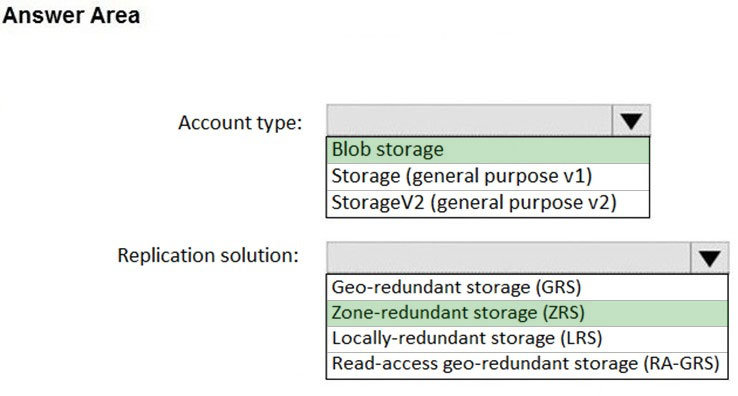

Answer:

Box 1: Blob storage -

Blob storage supports storage tiers.

Note: Azure offers three storage tiers to store data in blob storage: Hot Access tier, Cool Access tier, and Archive tier. These tiers target data at different stages of its lifecycle and offer cost-effective storage options for different use cases.

Box 2: Zone-redundant storage (ZRS)

Data in an Azure Storage account is always replicated three times in the primary region. Azure Storage offers two options for how your data is replicated in the primary region:

✑ Zone-redundant storage (ZRS) copies your data synchronously across three Azure availability zones in the primary region.

✑ Locally redundant storage (LRS) copies your data synchronously three times within a single physical location in the primary region. LRS is the least expensive replication option, but is not recommended for applications requiring high availability.
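The choice between these two options can be written as a small decision rule: take the cheapest option that still meets the availability requirement. The sketch below is illustrative only. It covers just the two primary-region options described above, and the option table and function name are hypothetical.

```python
# Primary-region replication options, ordered cheapest first.
# Assumption: LRS costs less than ZRS, as the explanation above states.
OPTIONS = [
    {"name": "LRS", "survives_datacenter_failure": False},
    {"name": "ZRS", "survives_datacenter_failure": True},
]


def pick_primary_region_redundancy(must_survive_datacenter_failure):
    """Return the cheapest option that satisfies the availability requirement."""
    for option in OPTIONS:
        if option["survives_datacenter_failure"] or not must_survive_datacenter_failure:
            return option["name"]
    raise ValueError("no replication option satisfies the requirement")


if __name__ == "__main__":
    print(pick_primary_region_redundancy(must_survive_datacenter_failure=True))
```

For this question the data must stay available if a single datacenter fails, so LRS is ruled out and ZRS is the cheapest option that qualifies.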

Reference:

https://cloud.netapp.com/blog/storage-tiers-in-azure-blob-storage-find-the-best-for-your-data

https://docs.microsoft.com/en-us/azure/storage/common/storage-redundancy

A company has a hybrid ASP.NET Web API application that is based on a software as a service (SaaS) offering.

Users report general issues with the data. You advise the company to implement live monitoring and use ad hoc queries on stored JSON data. You also advise the company to set up smart alerting to detect anomalies in the data.

You need to recommend a solution to set up smart alerting.

What should you recommend?

Answer:

C

Application Insights, a feature of Azure Monitor, is an extensible Application Performance Management (APM) service for developers and DevOps professionals.

Use it to monitor your live applications. It will automatically detect performance anomalies, and includes powerful analytics tools to help you diagnose issues and to understand what users actually do with your app.

Reference:

https://docs.microsoft.com/en-us/azure/azure-monitor/app/app-insights-overview

You have an Azure subscription that is linked to an Azure Active Directory (Azure AD) tenant. The subscription contains 10 resource groups, one for each department at your company.

Each department has a specific spending limit for its Azure resources.

You need to ensure that when a department reaches its spending limit, the compute resources of the department shut down automatically.

Which two features should you include in the solution? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

Answer:

CD

C: The spending limit in Azure prevents spending over your credit amount. All new customers who sign up for an Azure free account or subscription types that include credits over multiple months have the spending limit turned on by default. The spending limit is equal to the amount of credit and it can't be changed.

D: Turn on the spending limit after removing

This feature is available only when the spending limit has been removed indefinitely for subscription types that include credits over multiple months. You can use this feature to turn on your spending limit automatically at the start of the next billing period.

1. Sign in to the Azure portal as the Account Administrator.

2. Search for Cost Management + Billing.

3. Etc.

Reference:

https://docs.microsoft.com/en-us/azure/cost-management-billing/manage/spending-limit

HOTSPOT -

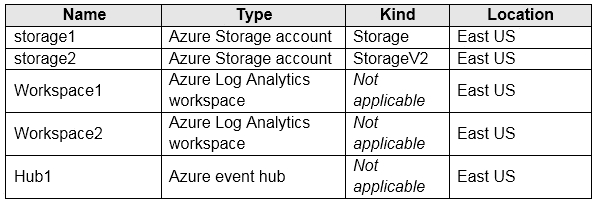

You have an Azure subscription that contains the resources shown in the following table.

You create an Azure SQL database named DB1 that is hosted in the East US region.

To DB1, you add a diagnostic setting named Settings1. Settings1 archives SQLInsights to storage1 and sends SQLInsights to Workspace1.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Hot Area:

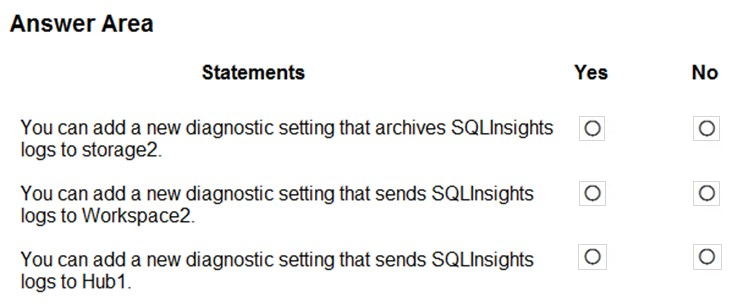

Answer:

Box 1: No -

You archive logs only to Azure Storage accounts.

Box 2: Yes -

Box 3: Yes -

Sending logs to Event Hubs allows you to stream data to external systems such as third-party SIEMs and other log analytics solutions.

Note: A single diagnostic setting can define no more than one of each of the destinations. If you want to send data to more than one of a particular destination type (for example, two different Log Analytics workspaces), then create multiple settings. Each resource can have up to 5 diagnostic settings.
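The two limits in this note (at most one destination of each type per setting, and at most five settings per resource) can be checked mechanically. The sketch below is a hypothetical validator over a plain dictionary, not an Azure API. The destination labels such as `storage` and `log_analytics` are made up for the example.

```python
from collections import Counter

MAX_SETTINGS_PER_RESOURCE = 5  # limit stated in the note above


def validate_diagnostic_settings(settings):
    """Return a list of rule violations; an empty list means the plan is valid.

    `settings` maps a setting name to the list of destination types it uses.
    """
    problems = []
    if len(settings) > MAX_SETTINGS_PER_RESOURCE:
        problems.append("more than 5 diagnostic settings on one resource")
    for name, destinations in settings.items():
        # A single setting may use each destination type at most once.
        for dest_type, count in Counter(destinations).items():
            if count > 1:
                problems.append(f"{name}: {count} destinations of type {dest_type}")
    return problems


if __name__ == "__main__":
    # Settings1 from the question: one storage account and one workspace.
    print(validate_diagnostic_settings({"Settings1": ["storage", "log_analytics"]}))
```

Settings1 passes because it uses one storage account and one workspace. Sending the same logs to a second workspace would need a second diagnostic setting.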

Reference:

https://docs.microsoft.com/en-us/azure/azure-monitor/platform/diagnostic-settings

HOTSPOT -

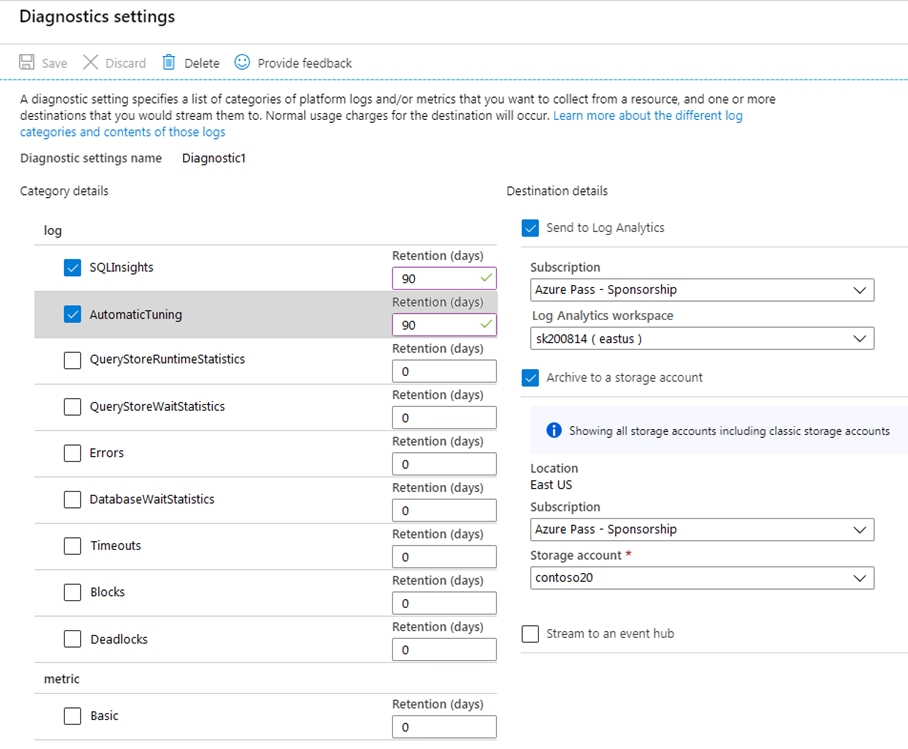

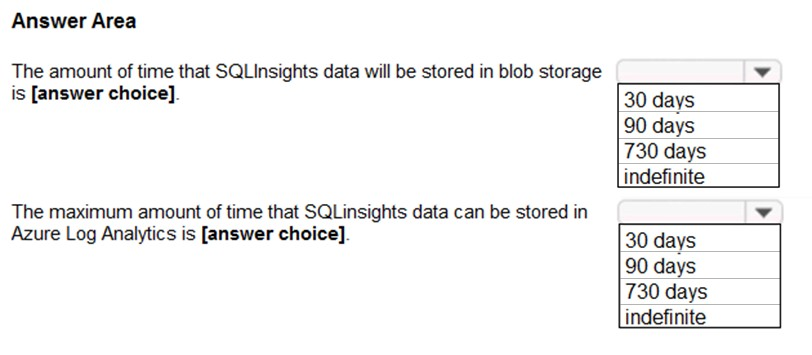

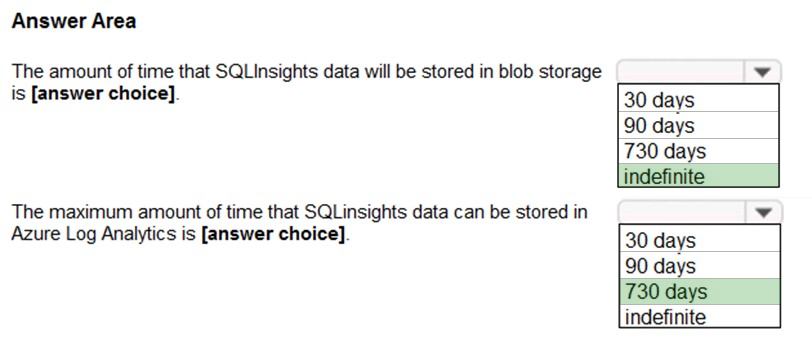

You deploy several Azure SQL Database instances.

You plan to configure the Diagnostics settings on the databases as shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

Hot Area:

Answer:

In the exhibit, the SQLInsights data is configured to be stored in Azure Log Analytics for 90 days. However, the question asks for the "maximum" amount of time that the data can be stored, which is 730 days.

Your company uses Microsoft System Center Service Manager on its on-premises network.

You plan to deploy several services to Azure.

You need to recommend a solution to push Azure service health alerts to Service Manager.

What should you include in the recommendation?

Answer:

A

Reference:

https://docs.microsoft.com/en-us/azure/azure-monitor/platform/itsmc-overview